[AI Talent Training Camp, Season 3] Conv2Former: A ViT-Style Convolutional Module

Date: 2025-08-06


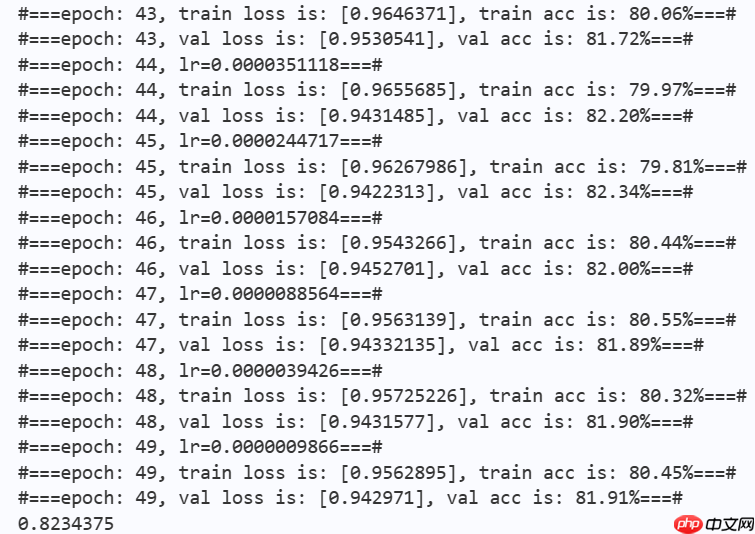

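This project reproduces the Conv2Former model. Conv2Former adopts a Transformer-style QKV structure but uses convolution to generate the weights that modulate the features, aiming to balance global-context modeling against computational cost. On CIFAR-10, the Conv2Former-N model, with channel widths {64, 128, 256, 512} and depths {2, 2, 8, 2}, reached a validation accuracy of … after 50 epochs of training. Compared with Swin-T, Conv2Former-N uses nearly … fewer parameters while also achieving higher accuracy (accuracy: …; parameters: …). These results illustrate the advantages of the Conv2Former design.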

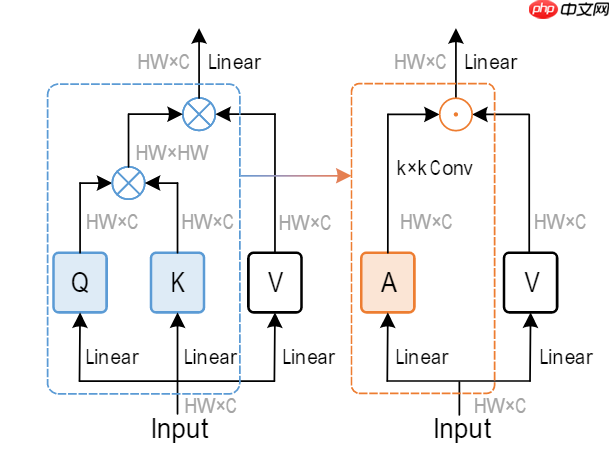

Conv2Former: A Transformer-Style Approach to Convolutional Feature Extraction

1. Abstract

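In recent years, most convolutional models have extracted features by stacking convolutions with different receptive fields in a pyramid structure, which tends to neglect global context. The Vision Transformer (ViT) was the first to bring the Transformer into computer vision and showed strong global modeling ability, but the cost of its self-attention grows quadratically with the number of tokens, which makes high-resolution images expensive to process. More recently, ConvNeXt kept the traditional residual design but adopted modern training techniques, raising plain convolutional networks to a level comparable with ViT. This raises a question: can we design an architecture that keeps the Transformer's ability to capture global features while greatly reducing computational cost? Conv2Former is one answer. It keeps a Transformer-like QKV structure, but instead of computing an attention matrix it uses a large-kernel depthwise convolution to generate weights that modulate the value features element-wise. Because the cost of this convolution grows only linearly with the number of pixels, the design avoids the quadratic cost of self-attention, and it offers a new direction for designing convolutional models. The architecture performs on par with ViT while being markedly more computationally efficient.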
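As a rough illustration of the cost argument above, the sketch below counts multiply-accumulates (MACs) for the two N×N matrix products of single-head self-attention and for the 11×11 depthwise convolution plus element-wise product used in convolutional modulation. It is a back-of-the-envelope estimate under simplifying assumptions (1×1 projections, softmax, and multi-head bookkeeping are ignored; the channel count of 64 and the map sizes are illustrative choices), not a measurement of either model.

```python
def attention_macs(h: int, w: int, c: int) -> int:
    """Approximate MACs of single-head self-attention on an h x w map with c channels.

    Counts only Q @ K^T (N*N outputs, c MACs each) and softmax(QK^T) @ V
    (N*c outputs, N MACs each), where N = h * w. Projections are ignored.
    """
    n = h * w
    return 2 * n * n * c


def conv_modulation_macs(h: int, w: int, c: int, k: int = 11) -> int:
    """Approximate MACs of convolutional modulation on the same map.

    Counts the k x k depthwise convolution (N*c outputs, k*k MACs each) and
    the element-wise product A * V (N*c multiplies). 1x1 convs are ignored.
    """
    n = h * w
    return n * c * k * k + n * c


for side in (8, 14, 28, 56):
    att = attention_macs(side, side, 64)
    mod = conv_modulation_macs(side, side, 64)
    print(f"{side}x{side}: attention {att:,} MACs, conv modulation {mod:,} MACs, ratio {att / mod:.1f}x")
```

On an 8×8 map the two estimates are close, but attention grows with (HW)² while the depthwise convolution grows with HW, so the gap widens quickly as resolution increases.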

![image.png]

```
!mkdir /home/aistudio/Conv2Former-libraries
!pip install paddlex -t /home/aistudio/Conv2Former-libraries
```

```python
import os
import sys

import numpy as np
import paddle
import paddle.nn.functional as F
import paddle.vision.transforms as transforms
from paddle import nn
from paddle.io import Dataset, DataLoader
from paddle.vision.datasets import Cifar10
from paddle.vision.transforms import Transpose
# import matplotlib.pyplot as plt
# from matplotlib.pyplot import figure

# Make the locally installed PaddleX importable
sys.path.append('/home/aistudio/Conv2Former-libraries')
import paddlex
```

Some training tricks: label smoothing and DropPath.

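```python
class LabelSmoothingCrossEntropy(nn.Layer):
    """Cross-entropy with label smoothing; `target` holds integer class indices."""

    def __init__(self, smoothing=0.1):
        super().__init__()
        self.smoothing = smoothing

    def forward(self, pred, target):
        confidence = 1. - self.smoothing
        log_probs = F.log_softmax(pred, axis=-1)
        # Flatten to shape [B] and match the int64 dtype of paddle.arange
        target = target.reshape([-1]).astype('int64')
        # Pair each row index with its target class to gather -log p(target)
        idx = paddle.stack([paddle.arange(log_probs.shape[0]), target], axis=1)
        nll_loss = paddle.gather_nd(-log_probs, index=idx)
        # Uniform term: mean negative log-probability over all classes
        smooth_loss = paddle.mean(-log_probs, axis=-1)
        loss = confidence * nll_loss + self.smoothing * smooth_loss
        return loss.mean()
```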
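The `forward` above mixes the usual negative log-likelihood with the mean negative log-probability over all classes. This is the same as cross-entropy against a smoothed target distribution q = (1 − ε)·one_hot + ε/K; with ε = 0.1 and K = 10 classes, the true class gets probability 0.91 and every other class 0.01. The standalone NumPy check below (the helper names are introduced here for illustration and are not part of the training code) computes both forms on random logits so the equivalence can be verified numerically.

```python
import numpy as np


def log_softmax(z: np.ndarray) -> np.ndarray:
    # Numerically stable log-softmax along the last axis
    z = z - z.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))


def smoothed_ce_as_in_code(logits, target, eps=0.1):
    # Same formula as LabelSmoothingCrossEntropy.forward
    logp = log_softmax(logits)
    nll = -logp[np.arange(len(target)), target]
    smooth = -logp.mean(axis=-1)
    return ((1 - eps) * nll + eps * smooth).mean()


def ce_with_soft_targets(logits, target, eps=0.1):
    # Cross-entropy against q = (1 - eps) * one_hot + eps / K
    k = logits.shape[-1]
    q = np.full(logits.shape, eps / k)
    q[np.arange(len(target)), target] += 1 - eps
    return -(q * log_softmax(logits)).sum(axis=-1).mean()


rng = np.random.default_rng(0)
logits = rng.normal(size=(4, 10))
target = np.array([3, 0, 9, 5])
print(smoothed_ce_as_in_code(logits, target), ce_with_soft_targets(logits, target))
```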

```python
def drop_path(x, drop_prob=0.0, training=False):
    """Drop paths (Stochastic Depth) per sample (when applied in main path of residual blocks).
    the original name is misleading as 'Drop Connect' is a different form of dropout in a separate paper...
    See discussion: https://github.com/tensorflow/tpu/issues/494#issuecomment-532968956 ...
    """
    if drop_prob == 0.0 or not training:
        return x
    keep_prob = paddle.to_tensor(1 - drop_prob)
    # One random value per sample, broadcast over all remaining dimensions
    shape = (paddle.shape(x)[0],) + (1,) * (x.ndim - 1)
    random_tensor = keep_prob + paddle.rand(shape, dtype=x.dtype)
    random_tensor = paddle.floor(random_tensor)  # binarize: 1 with probability keep_prob
    # Rescale surviving paths so the expected output equals the input
    output = x.divide(keep_prob) * random_tensor
    return output


class DropPath(nn.Layer):
    def __init__(self, drop_prob=None):
        super(DropPath, self).__init__()
        self.drop_prob = drop_prob

    def forward(self, x):
        return drop_path(x, self.drop_prob, self.training)
```
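To see what `drop_path` does, here is a NumPy re-implementation of the same logic in training mode (the name `drop_path_np` is introduced here for illustration). Each sample draws a single Bernoulli(keep_prob) value via `floor(keep_prob + U[0, 1))`, so an entire residual branch is either zeroed or kept and scaled by `1 / keep_prob`, which leaves the expected output unchanged.

```python
import numpy as np


def drop_path_np(x, drop_prob, rng):
    """NumPy mirror of drop_path (training mode): one Bernoulli(keep_prob) draw
    per sample, broadcast over the remaining dims; survivors scaled by 1/keep_prob."""
    if drop_prob == 0.0:
        return x
    keep_prob = 1.0 - drop_prob
    shape = (x.shape[0],) + (1,) * (x.ndim - 1)
    mask = np.floor(keep_prob + rng.random(shape))  # 1.0 with probability keep_prob, else 0.0
    return x / keep_prob * mask


rng = np.random.default_rng(0)
x = np.ones((20000, 4, 2, 2))
y = drop_path_np(x, drop_prob=0.2, rng=rng)
per_sample = y.reshape(len(y), -1)

# Each sample is either all zeros or all 1 / 0.8; the batch mean stays near 1.
print("fraction dropped:", np.mean(per_sample[:, 0] == 0))
print("mean value:", per_sample.mean())
```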

2. Data Loading and Augmentation

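- In the original paper […], the authors use common data-augmentation and regularization techniques (not all reproduced here): MixUp, CutMix, Stochastic Depth, Random Erasing, Label Smoothing, RandAug, and Layer Scale. This notebook uses Random Erasing, Label Smoothing, and Stochastic Depth, attempts MixUp through PaddleX, and omits CutMix, RandAug, and Layer Scale.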
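The PaddleX `MixupImage` transform in the training pipeline is applied per sample; batch-level MixUp is a common alternative. The NumPy sketch below is a generic MixUp implementation, not the paper's code; the function name `mixup_batch` and the default `alpha=0.2` are illustrative assumptions. It blends each image with a randomly permuted partner using a coefficient λ ~ Beta(α, α) and mixes the one-hot labels with the same λ.

```python
import numpy as np


def mixup_batch(images, labels, num_classes, alpha=0.2, rng=None):
    """Generic batch-level MixUp (illustrative sketch).

    images: float array of shape (B, C, H, W); labels: int array of shape (B,).
    Returns mixed images, soft targets of shape (B, num_classes), and lambda.
    """
    rng = np.random.default_rng() if rng is None else rng
    lam = float(rng.beta(alpha, alpha))  # one mixing coefficient for the whole batch
    perm = rng.permutation(len(images))  # partner index for every sample
    mixed = lam * images + (1.0 - lam) * images[perm]
    one_hot = np.eye(num_classes)[labels]
    targets = lam * one_hot + (1.0 - lam) * one_hot[perm]
    return mixed, targets, lam


rng = np.random.default_rng(0)
images = rng.random((8, 3, 32, 32)).astype(np.float32)
labels = rng.integers(0, 10, size=8)
mixed, targets, lam = mixup_batch(images, labels, num_classes=10, rng=rng)
print(mixed.shape, targets.shape, round(lam, 3))
```

The resulting targets are soft label vectors, so `LabelSmoothingCrossEntropy`, which expects integer class indices, would have to be replaced by a soft-target cross-entropy to train with them.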

```python
train_tfm = transforms.Compose([
    transforms.Resize((32, 32)),
    transforms.ColorJitter(brightness=0.2, contrast=0.2, saturation=0.2),
    # NOTE: MixupImage comes from PaddleX, a detection-oriented toolkit; verify that it
    # actually mixes single CIFAR images in your PaddleX version.
    paddlex.transforms.MixupImage(),
    # transforms.Cutmix(),
    transforms.RandomResizedCrop(32, scale=(0.6, 1.0)),
    transforms.RandomErasing(),
    transforms.RandomHorizontalFlip(0.5),
    transforms.RandomRotation(20),
    transforms.ToTensor(),
    transforms.Normalize(mean=(0.485, 0.456, 0.406), std=(0.229, 0.224, 0.225)),
])

test_tfm = transforms.Compose([
    transforms.Resize((32, 32)),
    transforms.ToTensor(),
    transforms.Normalize(mean=(0.485, 0.456, 0.406), std=(0.229, 0.224, 0.225)),
])

batch_size = 256
paddle.vision.set_image_backend('cv2')

# Use the CIFAR-10 dataset
train_dataset = Cifar10(data_file='./data/cifar-10-python.tar.gz', mode='train', transform=train_tfm)
val_dataset = Cifar10(data_file='./data/cifar-10-python.tar.gz', mode='test', transform=test_tfm)
print("train_dataset: %d" % len(train_dataset))
print("val_dataset: %d" % len(val_dataset))

train_loader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True, drop_last=True, num_workers=2)
val_loader = DataLoader(val_dataset, batch_size=batch_size, shuffle=False, drop_last=False, num_workers=2)
```

```
train_dataset: 50000
val_dataset: 10000
```

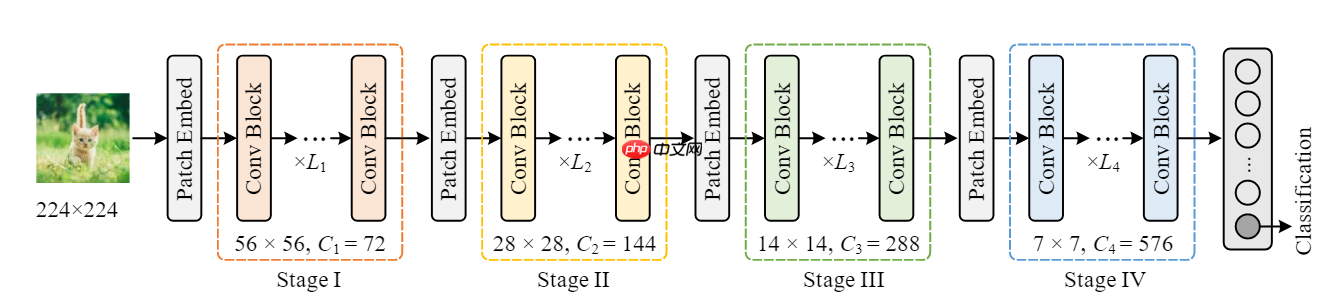

3. Model Construction

- 1 Building the Conv2Former modules

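Because of GPU-memory and training-budget limits, we change the input resolution from the paper's 224×224 to 32×32 and stack blocks using the Conv2Former-N configuration, i.e. {C1, C2, C3, C4} = {64, 128, 256, 512} and {L1, L2, L3, L4} = {2, 2, 8, 2}, where Ci is the channel width and Li the number of blocks in stage i.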
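The feature-map sizes this configuration produces at 32×32 input follow from the model code in this section: the stem `fc1` is a stride-1 1×1 convolution, and every stage except the last ends with a 2×2, stride-2 convolution. The helper `stage_shapes` below is a hypothetical bookkeeping function, not part of the original notebook, that traces these sizes under those assumptions.

```python
def stage_shapes(input_size=32, dims=(64, 128, 256, 512), depths=(2, 2, 8, 2)):
    """Trace (stage name, channels, height, width, blocks) through the Conv2Former code.

    Assumes, as in that code, a stride-1 1x1 stem and a 2x2 stride-2
    downsampling convolution after every stage except the last.
    """
    shapes = []
    size = input_size  # the stem keeps the spatial size unchanged
    for i, (channels, depth) in enumerate(zip(dims, depths)):
        shapes.append((f"stage{i + 1}", channels, size, size, depth))
        if i < len(dims) - 1:
            size //= 2  # kernel_size=2, stride=2 halves H and W
    return shapes


for name, c, h, w, d in stage_shapes():
    print(f"{name}: {d} blocks on a {c} x {h} x {w} feature map")
```

At 32×32 input the last stage therefore runs on 4×4 maps, where an 11×11 depthwise kernel with padding 5 reaches every position of the map from any location.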

```python
class MLP(nn.Layer):
    def __init__(self, dim, mlp_ratio=4, drop=0.,):
        super().__init__()
        # Cast so a float ratio (e.g. mlp_ratio=2.) still yields an integer channel count
        hidden = int(dim * mlp_ratio)
        self.norm = nn.LayerNorm(dim, epsilon=1e-6)
        self.fc1 = nn.Conv2D(dim, hidden, 1)
        # 3x3 depthwise convolution that mixes local spatial context
        self.pos = nn.Conv2D(hidden, hidden, 3, padding=1, groups=hidden)
        self.fc2 = nn.Conv2D(hidden, dim, 1)
        self.act = nn.GELU()
        self.drop = nn.Dropout(drop)  # defined but not applied in forward

    def forward(self, x):
        # LayerNorm over channels: NCHW -> NHWC -> normalize -> NCHW
        x = self.norm(x.transpose([0, 2, 3, 1])).transpose([0, 3, 1, 2])
        x = self.fc1(x)
        x = self.act(x)
        x = x + self.act(self.pos(x))
        x = self.fc2(x)
        return x
```

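```python
class ConvMod(nn.Layer):
    """Convolutional modulation: a large-kernel depthwise conv produces weights A that modulate V."""

    def __init__(self, dim):
        super().__init__()
        self.norm = nn.LayerNorm(dim, epsilon=1e-6)
        # A branch: 1x1 conv -> GELU -> 11x11 depthwise conv (padding=5 keeps H and W unchanged)
        self.a = nn.Sequential(
            nn.Conv2D(dim, dim, 1),
            nn.GELU(),
            nn.Conv2D(dim, dim, 11, padding=5, groups=dim),
        )
        self.v = nn.Conv2D(dim, dim, 1)     # value projection
        self.proj = nn.Conv2D(dim, dim, 1)  # output projection

    def forward(self, x):
        x = self.norm(x.transpose([0, 2, 3, 1])).transpose([0, 3, 1, 2])
        a = self.a(x)
        x = a * self.v(x)  # element-wise (Hadamard) modulation
        x = self.proj(x)
        return x
```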

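Convolutional modulation: here the authors use a large-kernel depthwise convolution. Their experiments showed that performance kept improving noticeably as the kernel size grew, and they finally set the kernel size to 11×11 (`kernel_size=11, padding=5` in `ConvMod`). This large kernel gives each position a much wider receptive field and therefore stronger global-context modeling. Reference: […]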
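In equation form, the `ConvMod` forward pass and the residual `Block` that wraps it read as follows. $W_1$, $W_2$, $W_3$ are symbols introduced here for the 1×1 convolutions `self.a[0]`, `self.v`, and `self.proj`, and $\odot$ is the element-wise product:

$$
\begin{aligned}
\hat{X} &= \mathrm{LN}(X) \\
A &= \mathrm{DWConv}_{11\times 11}\big(\mathrm{GELU}(W_1 \hat{X})\big) \\
V &= W_2 \hat{X} \\
\mathrm{ConvMod}(X) &= W_3\,(A \odot V) \\
X' &= X + \mathrm{DropPath}\big(\mathrm{ConvMod}(X)\big) \\
X'' &= X' + \mathrm{DropPath}\big(\mathrm{MLP}(X')\big)
\end{aligned}
$$

The padding $p = (k-1)/2 = 5$ keeps the spatial size unchanged, so $A$ and $V$ share the shape $C \times H \times W$ and can be multiplied element-wise. Unlike self-attention, which forms an $N \times N$ weight matrix over $N = HW$ positions, the modulation weights $A$ have the same size as the features they modulate.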

```python
class Block(nn.Layer):
    def __init__(self, dim, mlp_ratio=4, drop=0., drop_path=0.,):
        super().__init__()
        self.attn = ConvMod(dim)  # takes the place of self-attention
        self.mlp = MLP(dim, mlp_ratio, drop=drop)
        self.drop_path = DropPath(drop_path) if drop_path > 0. else nn.Identity()

    def forward(self, x):
        # Pre-norm residual branches (each sub-module applies its own LayerNorm)
        x = x + self.drop_path(self.attn(x))
        x = x + self.drop_path(self.mlp(x))
        return x
```

```python
class BasicLayer(nn.Layer):
    def __init__(self, dim, depth, mlp_ratio=4., drop=0., drop_path=0., downsample=True):
        super(BasicLayer, self).__init__()
        self.dim = dim
        # Accept either one rate per block (list) or a single rate for all blocks
        if not isinstance(drop_path, (list, tuple)):
            drop_path = [drop_path] * depth
        self.drop_path = drop_path
        # build blocks
        self.blocks = nn.LayerList([
            Block(dim=dim, mlp_ratio=mlp_ratio, drop=drop, drop_path=drop_path[i])
            for i in range(depth)
        ])
        # patch merging layer: normalize, then halve H and W while doubling the channels
        if downsample:
            self.downsample = nn.Sequential(
                nn.GroupNorm(num_groups=1, num_channels=dim),
                nn.Conv2D(dim, dim * 2, kernel_size=2, stride=2, bias_attr=False),
            )
        else:
            self.downsample = None

    def forward(self, x):
        for blk in self.blocks:
            x = blk(x)
        if self.downsample is not None:
            x = self.downsample(x)
        return x
```

```python
class Conv2Former(nn.Layer):
    def __init__(self, num_classes=10, depths=(2, 2, 8, 2), dim=(64, 128, 256, 512),
                 mlp_ratio=2., drop_rate=0., drop_path_rate=0.15, **kwargs):
        super().__init__()
        norm_layer = nn.LayerNorm
        self.num_classes = num_classes
        self.num_layers = len(depths)
        self.dim = dim  # each stage's downsampling doubles channels, so dim[i + 1] must equal 2 * dim[i]
        self.mlp_ratio = mlp_ratio
        self.pos_drop = nn.Dropout(p=drop_rate)

        # stochastic depth decay rule: drop rates grow linearly from 0 to drop_path_rate
        dpr = [x.item() for x in paddle.linspace(0, drop_path_rate, sum(depths))]

        # build layers; every stage except the last ends with a 2x downsampling
        self.layers = nn.LayerList()
        for i_layer in range(self.num_layers):
            layer = BasicLayer(
                dim[i_layer],
                depth=depths[i_layer],
                mlp_ratio=self.mlp_ratio,
                drop=drop_rate,
                drop_path=dpr[sum(depths[:i_layer]):sum(depths[:i_layer + 1])],
                downsample=(i_layer < self.num_layers - 1),
            )
            self.layers.append(layer)

        # Stem: 1x1 conv from RGB to the first-stage width (no spatial downsampling)
        self.fc1 = nn.Conv2D(3, dim[0], 1)
        self.norm = norm_layer(dim[-1], epsilon=1e-6)
        self.avgpool = nn.AdaptiveAvgPool2D(1)
        self.head = nn.Linear(dim[-1], num_classes) if num_classes > 0 else nn.Identity()
        self.apply(self._init_weights)

    def _init_weights(self, m):
        tn = nn.initializer.TruncatedNormal(std=.02)
        zeros = nn.initializer.Constant(0.)
        ones = nn.initializer.Constant(1.)
        if isinstance(m, (nn.Linear, nn.Conv1D, nn.Conv2D)):
            tn(m.weight)
            if m.bias is not None:
                zeros(m.bias)
        elif isinstance(m, (nn.LayerNorm, nn.GroupNorm)):
            zeros(m.bias)
            ones(m.weight)

    def forward_features(self, x):
        x = self.fc1(x)
        x = self.pos_drop(x)
        for layer in self.layers:
            x = layer(x)
        # LayerNorm over channels, then global average pooling
        x = self.norm(x.transpose([0, 2, 3, 1]))
        x = x.transpose([0, 3, 1, 2])
        x = self.avgpool(x)
        x = paddle.flatten(x, 1)
        return x

    def forward(self, x):
        x = self.forward_features(x)
        x = self.head(x)
        return x
```
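The stochastic-depth rule in `Conv2Former.__init__` spreads drop-path rates linearly over all blocks and then slices them per stage. The NumPy sketch below reproduces that slicing for the `depths=(2, 2, 8, 2)`, `drop_path_rate=0.1` setting used when the model is instantiated; the helper name `per_stage_drop_path` is illustrative, and `np.linspace` stands in for `paddle.linspace`.

```python
import numpy as np


def per_stage_drop_path(depths=(2, 2, 8, 2), drop_path_rate=0.1):
    """Linearly increasing drop-path rates over all blocks, sliced per stage
    the same way as dpr[sum(depths[:i]):sum(depths[:i + 1])] in Conv2Former.__init__."""
    dpr = np.linspace(0.0, drop_path_rate, sum(depths)).tolist()
    return [dpr[sum(depths[:i]):sum(depths[:i + 1])] for i in range(len(depths))]


for i, rates in enumerate(per_stage_drop_path()):
    print(f"stage{i + 1}:", [round(r, 4) for r in rates])
```

Deeper blocks are dropped more often, and the third stage, which holds 8 of the 14 blocks, spans most of the range.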

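```python
# Hyperparameters
learning_rate = 0.001
n_epochs = 50
paddle.seed(42)
np.random.seed(42)
batch_size = 256
work_path = './work/model'
os.makedirs(work_path, exist_ok=True)  # make sure the checkpoint directory exists
```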

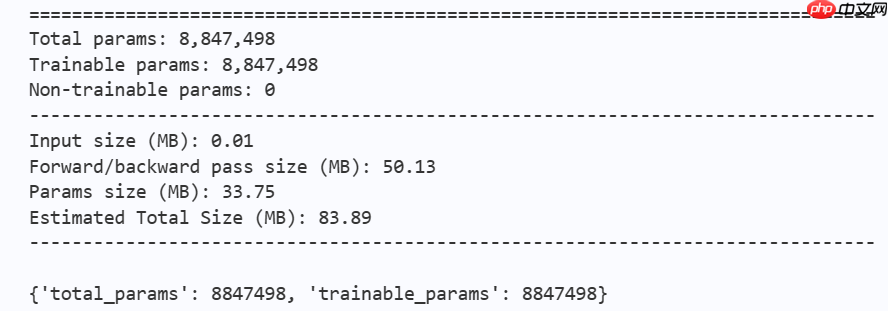

```python
# Print the Conv2Former model summary
model = Conv2Former(num_classes=10, depths=(2, 2, 8, 2), dim=(64, 128, 256, 512), mlp_ratio=2, drop_path_rate=0.1)
params_info = paddle.summary(model, input_size=(1, 3, 32, 32))
print(params_info)
```


```python
criterion = LabelSmoothingCrossEntropy()
# One scheduler step per batch, so T_max counts iterations (drop_last=True -> 50000 // batch_size per epoch)
scheduler = paddle.optimizer.lr.CosineAnnealingDecay(learning_rate=learning_rate,
                                                     T_max=50000 // batch_size * n_epochs,
                                                     verbose=False)
optimizer = paddle.optimizer.Adam(parameters=model.parameters(), learning_rate=scheduler, weight_decay=1e-5)

best_acc = 0.0
val_acc = 0.0
loss_record = {'train': {'loss': [], 'iter': []}, 'val': {'loss': [], 'iter': []}}  # for recording loss
acc_record = {'train': {'acc': [], 'iter': []}, 'val': {'acc': [], 'iter': []}}     # for recording accuracy
loss_iter = 0
acc_iter = 0

for epoch in range(n_epochs):
    # ---------- Training ----------
    model.train()
    train_num = 0.0
    train_loss = 0.0
    val_num = 0.0
    val_loss = 0.0
    accuracy_manager = paddle.metric.Accuracy()
    val_accuracy_manager = paddle.metric.Accuracy()
    print("#===epoch: {}, lr={:.10f}===#".format(epoch, optimizer.get_lr()))
    for batch_id, data in enumerate(train_loader):
        x_data, y_data = data
        labels = paddle.unsqueeze(y_data, axis=1)
        logits = model(x_data)
        loss = criterion(logits, y_data)
        acc = paddle.metric.accuracy(logits, labels)
        accuracy_manager.update(acc)
        if batch_id % 10 == 0:
            loss_record['train']['loss'].append(float(loss))
            loss_record['train']['iter'].append(loss_iter)
            loss_iter += 1
        loss.backward()
        optimizer.step()
        scheduler.step()
        optimizer.clear_grad()
        # Accumulate a Python float so the autograd graph is not kept alive
        train_loss += float(loss)
        train_num += len(y_data)
    total_train_loss = (train_loss / train_num) * batch_size  # approximate mean loss per batch
    train_acc = accuracy_manager.accumulate()
    acc_record['train']['acc'].append(train_acc)
    acc_record['train']['iter'].append(acc_iter)
    acc_iter += 1
    # Print the information.
    print("#===epoch: {}, train loss is: {:.4f}, train acc is: {:2.2f}%===#".format(epoch, total_train_loss, train_acc * 100))

    # ---------- Validation ----------
    model.eval()
    for batch_id, data in enumerate(val_loader):
        x_data, y_data = data
        labels = paddle.unsqueeze(y_data, axis=1)
        with paddle.no_grad():
            logits = model(x_data)
            loss = criterion(logits, y_data)
        acc = paddle.metric.accuracy(logits, labels)
        val_accuracy_manager.update(acc)
        val_loss += float(loss)
        val_num += len(y_data)
    total_val_loss = (val_loss / val_num) * batch_size
    loss_record['val']['loss'].append(total_val_loss)
    loss_record['val']['iter'].append(loss_iter)
    val_acc = val_accuracy_manager.accumulate()
    acc_record['val']['acc'].append(val_acc)
    acc_record['val']['iter'].append(acc_iter)
    print("#===epoch: {}, val loss is: {:.4f}, val acc is: {:2.2f}%===#".format(epoch, total_val_loss, val_acc * 100))

    # ===================save====================
    if val_acc > best_acc:
        best_acc = val_acc
        paddle.save(model.state_dict(), os.path.join(work_path, 'best_model.pdparams'))
        paddle.save(optimizer.state_dict(), os.path.join(work_path, 'best_optimizer.pdopt'))

print(best_acc)
paddle.save(model.state_dict(), os.path.join(work_path, 'final_model.pdparams'))
paddle.save(optimizer.state_dict(), os.path.join(work_path, 'final_optimizer.pdopt'))
```
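Since `scheduler.step()` is called once per batch and `drop_last=True`, each epoch has 50000 // 256 = 195 steps and `T_max` = 195 × 50 = 9750. The sketch below evaluates the closed-form cosine-annealing formula, which Paddle's `CosineAnnealingDecay` computes recursively (the two are mathematically equivalent), to estimate the learning rate the loop prints at the start of a few epochs. `cosine_lr` is a helper introduced here, not part of Paddle.

```python
import math


def cosine_lr(step, base_lr=0.001, t_max=9750, eta_min=0.0):
    """Closed-form cosine annealing: eta_min + (base_lr - eta_min) * (1 + cos(pi * step / t_max)) / 2."""
    return eta_min + (base_lr - eta_min) * (1 + math.cos(math.pi * step / t_max)) / 2


batch_size, n_epochs = 256, 50
iters_per_epoch = 50000 // batch_size  # drop_last=True discards the final partial batch
t_max = iters_per_epoch * n_epochs     # same value as T_max in the training cell

for epoch in (0, 25, 49):
    step = epoch * iters_per_epoch     # scheduler steps completed before this epoch starts
    print(f"epoch {epoch}: lr = {cosine_lr(step, t_max=t_max):.10f}")
```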


4. Conclusion and Discussion

- 1 Conclusion

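This project reproduces the network structure from the Conv2Former paper in the PaddlePaddle framework and trains it as a first experiment. Without any pretrained weights, after 50 epochs of training the model reached a validation accuracy of … on CIFAR-10, a promising result. This illustrates the strengths of the Conv2Former module design and suggests new directions for interpreting Transformers and redesigning convolutional modules.

Parameters: Conv2Former-N: …
Accuracy (validation set): …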

Note: the Swin-T results are taken from the project 浅析 Swin Transformer ("A Brief Analysis of Swin Transformer"); the model is swin_tiny.
