Road Surface Segmentation with U-Net and PSPNet on PaddlePaddle 2.1

Updated: 2026-02-15 11:29:59


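This article uses the PaddlePaddle framework and the RTK dataset to segment road surfaces with U-Net and PSPNet. It covers dataset preparation, the training and test dataset classes, and the construction of both models: U-Net, built from an encoder and a decoder, and PSPNet, built from a backbone, a pyramid pooling module, and a classifier. In the validation run after training, U-Net reaches a pixel accuracy of 87.28% and an IoU of 21.21%, ahead of PSPNet on both measures, and the predictions of the two models are compared visually.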

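Dataset

Instead of the dataset supplied with the course, this project uses the Road Traversing Knowledge (RTK) Dataset.

Features: the images were captured with a low-cost camera (an HP Webcam HD model) and cover a variety of road surfaces: asphalt variations, unpaved roads, mixtures of surface types, and road damage such as potholes.

Label table (class and mask value, as used by the code in this article):

- Background: 0
- Asphalt: 1
- Paved: 2
- Unpaved: 3
- Markings: 4
- Speed-Bump: 5
- Cats-Eye: 6
- Storm-Drain: 7
- Patch: 9
- Water-Puddle: 10
- Pothole: 11
- Cracks: 12

Sample images, one per class (figures not reproduced): background, asphalt road, paved road, unpaved road, road markings, speed bump, cat's eye, storm drain, patch, water puddle, pothole, crack.

The mask images, whose pixel values are class indices, are used as labels during training; the color images are for display only. The background is not counted among the dataset's ground-object classes.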
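The relation between a class name and its mask value can be sketched as below. This is a minimal illustration that assumes, as the label-mapping code in the dataset-preparation section does, that mask value 8 is unused and every label from 'Patch' onward is shifted by one. The helper name `mask_value_for` is hypothetical.

```python
# Class names in the order used throughout this article.
LABELS = ['Background', 'Asphalt', 'Paved', 'Unpaved', 'Markings',
          'Speed-Bump', 'Cats-Eye', 'Storm-Drain', 'Patch',
          'Water-Puddle', 'Pothole', 'Cracks']


def mask_value_for(list_index):
    """Hypothetical helper: map a position in LABELS to its mask pixel value.

    Assumption taken from the article's own code: value 8 is skipped,
    so every label at position 8 or later is stored as position + 1.
    """
    return list_index + 1 if list_index >= 8 else list_index


label_to_value = {name: mask_value_for(i) for i, name in enumerate(LABELS)}

for name, value in label_to_value.items():
    print(f"{value:2d}  {name}")
```

The largest mask value is 12, which is consistent with the models later in the article being built with 13 output classes.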

I. Dataset Preparation

    # Unzip the archives into the dataset folder
    !mkdir work/dataset
    !unzip -q data/data71331/RTK_Segmentation.zip -d work/dataset/
    !unzip -q data/data71331/tests.zip -d work/dataset/

    # Create the folders for the validation set
    !mkdir work/dataset/val_frames
    !mkdir work/dataset/val_colors
    !mkdir work/dataset/val_masks

    # Move the first 51 files (indices 0 to 50, in sorted name order) into the
    # validation folders. The selection is deterministic, not random.
    import os
    import re
    import shutil


    def moveImgDir(color_dir, newcolor_dir, mask_dir, newmask_dir, frames_dir, newframes_dir):
        filenames = os.listdir(color_dir)
        filenames.sort()
        for index, filename in enumerate(filenames):
            src = os.path.join(color_dir, filename)
            dst = os.path.join(newcolor_dir, filename)
            shutil.move(src, dst)
            # File names in the colors folder carry an extra "GT", so strip it
            new_filename = re.sub('GT', '', filename)
            src = os.path.join(mask_dir, new_filename)
            dst = os.path.join(newmask_dir, new_filename)
            shutil.move(src, dst)
            src = os.path.join(frames_dir, new_filename)
            dst = os.path.join(newframes_dir, new_filename)
            shutil.move(src, dst)
            if index == 50:
                break


    moveImgDir(r"work/dataset/colors", r"work/dataset/val_colors",
               r"work/dataset/masks", r"work/dataset/val_masks",
               r"work/dataset/frames", r"work/dataset/val_frames")
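The cell above takes the first files in sorted order rather than a random sample. If a random split is preferred, one possible approach is sketched below. It only selects file names and maps each color-image name to the matching mask or frame name; the function names, the fixed seed, and the stand-in file list are illustrative assumptions and not part of the original project.

```python
import random
import re


def pick_validation_names(color_names, count, seed=0):
    """Return `count` file names chosen at random, reproducibly."""
    rng = random.Random(seed)  # local RNG, so the global random state is untouched
    return sorted(rng.sample(sorted(color_names), count))


def frame_name_for(color_name):
    """Color images carry a 'GT' marker that masks and frames do not."""
    return re.sub('GT', '', color_name)


# Stand-in for os.listdir("work/dataset/colors")
names = [f"{i:09d}GT.png" for i in range(200)]
val_names = pick_validation_names(names, 50)
print(len(val_names), val_names[0], '->', frame_name_for(val_names[0]))
```

Fixing the seed keeps the split stable between runs, so validation scores from different training runs stay comparable.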

    # Inspect the mapping between mask values and colors, and save it as a JSON file
    import json
    import os
    import re

    import cv2
    import numpy as np

    labels = ['Background', 'Asphalt', 'Paved', 'Unpaved', 'Markings', 'Speed-Bump',
              'Cats-Eye', 'Storm-Drain', 'Patch', 'Water-Puddle', 'Pothole', 'Cracks']
    label_color_dict = {}

    mask_dir = r"work/dataset/masks"
    color_dir = r"work/dataset/colors"
    mask_names = [f for f in os.listdir(mask_dir) if f.endswith('png')]
    color_names = [f for f in os.listdir(color_dir) if f.endswith('png')]

    for index, label in enumerate(labels):
        if index >= 8:
            index += 1  # mask value 8 is unused, so later labels are shifted by one
        for color_name in color_names:
            color = cv2.imread(os.path.join(color_dir, color_name), -1)
            color = cv2.cvtColor(color, cv2.COLOR_BGR2RGB)
            mask_name = re.sub('GT', '', color_name)
            mask = cv2.imread(os.path.join(mask_dir, mask_name), -1)
            mask_color = color[np.where(mask == index)]
            if len(mask_color) != 0:
                # The first pixel of this class gives the class color
                label_color_dict[label] = list(mask_color[0].astype(float))
                break

    with open(r"work/dataset/mask2color.json", "w", encoding='utf-8') as f:
        # json.dump(dict_, f)  # would write everything on one line
        json.dump(label_color_dict, f, indent=2, sort_keys=True, ensure_ascii=False)  # multi-line output

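II. Dataset Classes (Training and Test Sets)

1. Data transforms

This part shows how the image data is preprocessed when it is read, so that the model generalizes better during training. The transforms include rotation, padding, center cropping, and normalization; they increase the diversity of the training data and can improve segmentation results. The code lives in `work/Class3/data_transform.py` and provides, among others:

- rotation, to help reduce overfitting;
- padding of blank borders, to keep image sizes consistent;
- center cropping;
- normalization, which rescales pixel values to a fixed range.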
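The contents of data_transform.py are not reproduced in this article. The following is only a rough sketch, written with NumPy, of how a paired image/label transform pipeline of this kind can work. The class names mirror some of those imported later, but their bodies and parameters here are assumptions, not the project's actual implementation.

```python
import numpy as np


class Compose:
    """Apply a list of (image, label) -> (image, label) transforms in order."""

    def __init__(self, transforms):
        self.transforms = transforms

    def __call__(self, image, label):
        for transform in self.transforms:
            image, label = transform(image, label)
        return image, label


class RandomFlip:
    """Flip image and label horizontally together so they stay aligned."""

    def __init__(self, prob=0.5, rng=None):
        self.prob = prob
        self.rng = rng or np.random.default_rng()

    def __call__(self, image, label):
        if self.rng.random() < self.prob:
            image, label = image[:, ::-1], label[:, ::-1]
        return image, label


class Normalize:
    """Assumed behavior: scale the image to [0, 1], then apply (x - mean) / std.

    The label is returned unchanged because it holds class indices.
    """

    def __init__(self, mean, std):
        self.mean, self.std = mean, std

    def __call__(self, image, label):
        image = (image.astype(np.float32) / 255.0 - self.mean) / self.std
        return image, label


image = np.arange(2 * 3 * 3, dtype=np.uint8).reshape(2, 3, 3)  # H=2, W=3, C=3
label = np.array([[0, 1, 2], [3, 4, 5]], dtype=np.uint8)
pipeline = Compose([RandomFlip(prob=1.0), Normalize(mean=0.0, std=1.0)])
out_image, out_label = pipeline(image, label)
print(out_image.shape, out_image.dtype, out_label.tolist())
```

The key design point is that every geometric transform receives the image and the label together, so a flip or crop can never leave the two out of alignment.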

2. Training-set and test-set classes

Image data is transformed as it is read. The training-set class applies more transforms, whereas the test-set class only resizes and normalizes. The training-set class returns the transformed data and labels, both stored as .png files; the test-set class additionally returns the image path, which is convenient for later visualization.

    # Preliminaries for the dataset classes
    import os

    import cv2
    import numpy as np
    import paddle
    from paddle.io import Dataset, DataLoader
    from work.Class3.data_transform import Compose, Normalize, RandomSacle, RandomFlip, ConvertDataType, Resize

    IMG_EXTENSIONS = ['.jpg', '.JPG', '.jpeg', '.JPEG', '.png', '.PNG', '.ppm', '.PPM', '.bmp', '.BMP']


    def is_image_file(filename):
        return any(filename.endswith(extension) for extension in IMG_EXTENSIONS)


    def get_paths_from_images(path):
        """get image path list from image folder"""
        assert os.path.isdir(path), '{:s} is not a valid directory'.format(path)
        images = []
        for dirpath, _, fnames in sorted(os.walk(path)):
            for fname in sorted(fnames):
                if is_image_file(fname):
                    img_path = os.path.join(dirpath, fname)
                    images.append(img_path)
        assert images, '{:s} has no valid image file'.format(path)
        return images

Training-set class: BasicDataset reads the .png images and their labels and applies the preprocessing.

    # Test-set class
    class Basic_ValDataset(Dataset):
        '''
        Reads the data and returns the transformed data, the label,
        and the path of the image.
        '''

        def __init__(self, image_folder, label_folder, size):
            super(Basic_ValDataset, self).__init__()
            self.image_folder = image_folder
            self.label_folder = label_folder
            self.path_Img = get_paths_from_images(image_folder)
            if label_folder is not None:
                self.path_Label = get_paths_from_images(label_folder)
            self.size = size
            self.transform = Compose(
                [Resize(size),
                 ConvertDataType(),
                 Normalize(0, 1)
                 ]
            )

        def preprocess(self, data, label):
            h, w, c = data.shape
            h_gt, w_gt = label.shape
            assert h == h_gt, "image and label heights differ"
            assert w == w_gt, "image and label widths differ"
            data, label = self.transform(data, label)
            label = label[:, :, np.newaxis]
            return data, label

        def __getitem__(self, index):
            Img_path = self.path_Img[index]
            Label_path = self.path_Label[index]

            data = cv2.imread(Img_path, cv2.IMREAD_COLOR)
            data = cv2.cvtColor(data, cv2.COLOR_BGR2RGB)
            label = cv2.imread(Label_path, cv2.IMREAD_GRAYSCALE)
            data, label = self.preprocess(data, label)
            return {'Image': data, 'Label': label, 'Path': Img_path}

        def __len__(self):
            return len(self.path_Img)

    # Quick check that the dataset class works
    %matplotlib inline
    import matplotlib.pyplot as plt

    paddle.device.set_device("cpu")

    with paddle.no_grad():
        dataset = BasicDataset("work/dataset/frames", "work/dataset/masks", 256)
        dataloader = DataLoader(dataset, batch_size=1, shuffle=True, num_workers=0)

        for index, traindata in enumerate(dataloader):
            image = traindata["Image"]
            image = np.asarray(image)[0]
            label = traindata["Label"]
            label = np.asarray(label)[0]
            print(image.shape, label.shape)
            plt.subplot(1, 2, 1), plt.title('frames')
            plt.imshow(image), plt.axis('off')
            plt.subplot(1, 2, 2), plt.title('label')
            plt.imshow(label.squeeze()), plt.axis('off')
            plt.show()
            print(50 * '*')
            if index == 5:
                break

Output (the same three lines are printed for each of the six samples):

    (256, 256, 3) (256, 256, 1)
    <Figure size 432x288 with 2 Axes>
    **************************************************

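III. Building the U-Net Model

U-Net is a U-shaped network with two stages: the encoder down-samples the image to produce high-level semantic feature maps, and the decoder up-samples those feature maps back to the resolution of the original image. To reduce the number of trainable parameters in the convolutions and improve performance, a SeparableConv class is also defined. The network is assembled in three steps: define the encoder, define the decoder, and then combine them into the complete U-Net model. The model is saved in unet.py under the Class3 folder.

1. Defining the SeparableConv2D class

The idea is to decompose a Filter_Size × Filter_Size × Num_Filters convolution into two smaller convolutions. First, a Filter_Size × Filter_Size × 1 kernel is applied to each input channel separately, so the number of output channels equals the number of input channels; then a 1 × 1 × Num_Filters kernel is applied to that result.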

    import paddle
    import paddle.nn as nn
    from paddle.nn import functional as F


    class SeparableConv2D(paddle.nn.Layer):
        def __init__(self,
                     in_channels,
                     out_channels,
                     kernel_size,
                     stride=1,
                     padding=0,
                     dilation=1,
                     groups=None,
                     weight_attr=None,
                     bias_attr=None,
                     data_format="NCHW"):
            super(SeparableConv2D, self).__init__()

            self._padding = padding
            self._stride = stride
            self._dilation = dilation
            self._in_channels = in_channels
            self._data_format = data_format

            # Weights of the first (depthwise) convolution; it has no bias
            filter_shape = [in_channels, 1] + self.convert_to_list(kernel_size, 2, 'kernel_size')
            self.weight_conv = self.create_parameter(shape=filter_shape, attr=weight_attr)

            # Weights and bias of the second (pointwise, 1 x 1) convolution
            filter_shape = [out_channels, in_channels] + self.convert_to_list(1, 2, 'kernel_size')
            self.weight_pointwise = self.create_parameter(shape=filter_shape, attr=weight_attr)
            self.bias_pointwise = self.create_parameter(shape=[out_channels],
                                                        attr=bias_attr,
                                                        is_bias=True)

        # dtype defaults to the built-in int; the np.int alias was removed from NumPy
        def convert_to_list(self, value, n, name, dtype=int):
            if isinstance(value, dtype):
                return [value, ] * n
            else:
                try:
                    value_list = list(value)
                except TypeError:
                    raise ValueError("The " + name +
                                     "'s type must be list or tuple. Received: " + str(value))
                if len(value_list) != n:
                    raise ValueError("The " + name + "'s length must be " + str(n) +
                                     ". Received: " + str(value))
                for single_value in value_list:
                    try:
                        dtype(single_value)
                    except (ValueError, TypeError):
                        raise ValueError(
                            "The " + name + "'s type must be a list or tuple of " + str(n) +
                            " " + str(dtype) + " . Received: " + str(value) + " "
                            "including element " + str(single_value) + " of type" + " " +
                            str(type(single_value)))
                return value_list

        def forward(self, inputs):
            # Depthwise step: one kernel per input channel (groups == in_channels)
            conv_out = F.conv2d(inputs,
                                self.weight_conv,
                                padding=self._padding,
                                stride=self._stride,
                                dilation=self._dilation,
                                groups=self._in_channels,
                                data_format=self._data_format)

            # Pointwise step: 1 x 1 convolution that mixes the channels
            out = F.conv2d(conv_out,
                           self.weight_pointwise,
                           bias=self.bias_pointwise,
                           padding=0,
                           stride=1,
                           dilation=1,
                           groups=1,
                           data_format=self._data_format)
            return out
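The parameter saving of this decomposition can be checked with a little arithmetic. The sketch below counts weights only (biases are ignored), and the channel sizes 32 and 64 are arbitrary values chosen for illustration.

```python
def standard_conv_params(in_channels, out_channels, kernel_size):
    """Weights of an ordinary convolution (bias not counted)."""
    return kernel_size * kernel_size * in_channels * out_channels


def separable_conv_params(in_channels, out_channels, kernel_size):
    """One k x k x 1 kernel per input channel, then a 1 x 1 pointwise convolution."""
    depthwise = kernel_size * kernel_size * in_channels
    pointwise = in_channels * out_channels
    return depthwise + pointwise


std = standard_conv_params(32, 64, 3)
sep = separable_conv_params(32, 64, 3)
print(std, sep, round(std / sep, 2))
```

For these sizes the separable form needs 2,336 weights instead of 18,432, roughly an eightfold reduction.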

2. Defining the Encoder

The down-sampling stage of the network is wrapped in a single Layer so that it can be reused and less code has to be written. Down-sampling corresponds to the descending arm of the U: one unit structure is repeated, the channel count grows and the spatial size shrinks each time, and a residual connection is added. All of this is abstracted into one class.

    class Encoder(paddle.nn.Layer):
        def __init__(self, in_channels, out_channels):
            super(Encoder, self).__init__()

            self.relus = paddle.nn.LayerList(
                [paddle.nn.ReLU() for i in range(2)])
            self.separable_conv_01 = SeparableConv2D(in_channels,
                                                     out_channels,
                                                     kernel_size=3,
                                                     padding='same')
            self.bns = paddle.nn.LayerList(
                [paddle.nn.BatchNorm2D(out_channels) for i in range(2)])
            self.separable_conv_02 = SeparableConv2D(out_channels,
                                                     out_channels,
                                                     kernel_size=3,
                                                     padding='same')
            self.pool = paddle.nn.MaxPool2D(kernel_size=3, stride=2, padding=1)
            self.residual_conv = paddle.nn.Conv2D(in_channels,
                                                  out_channels,
                                                  kernel_size=1,
                                                  stride=2,
                                                  padding='same')

        def forward(self, inputs):
            previous_block_activation = inputs

            y = self.relus[0](inputs)
            y = self.separable_conv_01(y)
            y = self.bns[0](y)
            y = self.relus[1](y)
            y = self.separable_conv_02(y)
            y = self.bns[1](y)
            y = self.pool(y)

            # Residual branch: a strided 1 x 1 convolution matches the pooled shape
            residual = self.residual_conv(previous_block_activation)
            y = paddle.add(y, residual)

            return y

3. Defining the Decoder

Once the channel count has reached its maximum and the high-level semantic feature map has been obtained, the network starts decoding. Decoding is up-sampling: the channel count decreases step by step while the spatial size increases, until the original size is restored. As in the encoder, the repeated residual structure is defined once as a Layer and reused when the model is assembled.

    class Decoder(paddle.nn.Layer):
        def __init__(self, in_channels, out_channels):
            super(Decoder, self).__init__()

            self.relus = paddle.nn.LayerList(
                [paddle.nn.ReLU() for i in range(2)])
            self.conv_transpose_01 = paddle.nn.Conv2DTranspose(in_channels,
                                                               out_channels,
                                                               kernel_size=3,
                                                               padding=1)
            self.conv_transpose_02 = paddle.nn.Conv2DTranspose(out_channels,
                                                               out_channels,
                                                               kernel_size=3,
                                                               padding=1)
            self.bns = paddle.nn.LayerList(
                [paddle.nn.BatchNorm2D(out_channels) for i in range(2)]
            )
            self.upsamples = paddle.nn.LayerList(
                [paddle.nn.Upsample(scale_factor=2.0) for i in range(2)]
            )
            self.residual_conv = paddle.nn.Conv2D(in_channels,
                                                  out_channels,
                                                  kernel_size=1,
                                                  padding='same')

        def forward(self, inputs):
            previous_block_activation = inputs

            y = self.relus[0](inputs)
            y = self.conv_transpose_01(y)
            y = self.bns[0](y)
            y = self.relus[1](y)
            y = self.conv_transpose_02(y)
            y = self.bns[1](y)
            y = self.upsamples[0](y)

            # Residual branch: up-sample the input, then match the channel count
            residual = self.upsamples[1](previous_block_activation)
            residual = self.residual_conv(residual)

            y = paddle.add(y, residual)

            return y

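4. Assembling U-Net

The whole network is assembled in the U-shaped layout, with three down-sampling stages and four up-sampling stages.

The UNet model starts with a convolution layer followed by batch normalization and a ReLU activation. The encoder and decoder blocks are then created in loops, so that information is passed on layer by layer. A final convolution layer produces the output.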

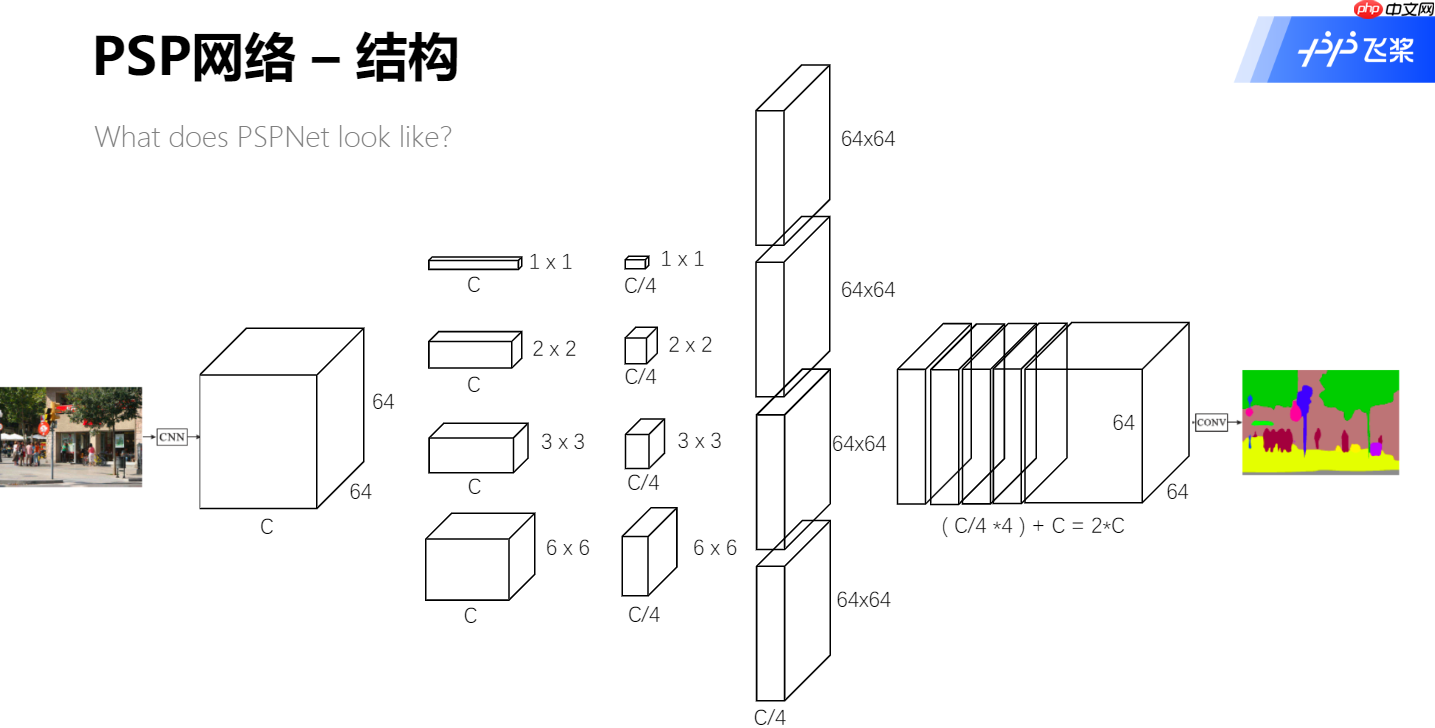
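The UNet code itself appears only as an image, so the sketch below does not reproduce it. It merely traces how the spatial size could evolve for a 256 × 256 input under two assumptions: the first convolution uses stride 2 (not confirmed by the article), and each Encoder halves the size through `MaxPool2D(kernel_size=3, stride=2, padding=1)` while each Decoder doubles it. The function names are hypothetical.

```python
def pooled_size(size, kernel=3, stride=2, padding=1):
    """Output length of a pooling window along one axis (floor mode)."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_unet_sizes(input_size, num_encoders=3, num_decoders=4, stem_stride=2):
    """List the spatial size after the stem, each encoder, and each decoder."""
    sizes = [input_size]
    size = -(-input_size // stem_stride)  # ceil division; the stem stride is an assumption
    sizes.append(size)
    for _ in range(num_encoders):
        size = pooled_size(size)
        sizes.append(size)
    for _ in range(num_decoders):
        size *= 2  # Upsample(scale_factor=2.0)
        sizes.append(size)
    return sizes


print(trace_unet_sizes(256))
```

Under these assumptions, three down-sampling stages plus a strided first convolution are undone by exactly four up-sampling stages, which would explain why the counts differ.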

IV. Building the PSPNet Model

Network structure

PSPNet consists of three parts: the backbone, the PSPModule, and the classifier.

The backbone is the CNN part of the network. The reference design uses ResNet_vd as the basic structure; to make loading pretrained weights easier, this tutorial uses ResNet instead. Through a series of convolution and pooling operations, the backbone produces the feature map that the later stages work on.

The PSPModule consists of four parallel branches, each of which receives the feature map produced by the backbone. In each branch, adaptive_pool changes the feature map to a different size so that context is captured at a different scale; the result then goes through a convolution and up-sampling, and the outputs of all branches are concatenated with the backbone feature map.

The classifier applies convolution layers to classify every pixel.

Assembly

The overall network is defined first, and the PSPModule is implemented afterwards. The code is in pspnet.py under the Class3 folder.
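To make the adaptive pooling step concrete, here is a small NumPy sketch of adaptive average pooling on a single-channel map. It uses the usual bin rule (bin i covers indices from floor(i·H/out) up to ceil((i+1)·H/out)); the function name and the 6 × 6 test input are illustrative, and the project itself relies on Paddle's built-in layer rather than on code like this.

```python
import math

import numpy as np


def adaptive_avg_pool2d(feature, output_size):
    """Average-pool an (H, W) array down to (output_size, output_size)."""
    h, w = feature.shape
    pooled = np.empty((output_size, output_size), dtype=np.float64)
    for i in range(output_size):
        r0 = (i * h) // output_size
        r1 = math.ceil((i + 1) * h / output_size)
        for j in range(output_size):
            c0 = (j * w) // output_size
            c1 = math.ceil((j + 1) * w / output_size)
            pooled[i, j] = feature[r0:r1, c0:c1].mean()
    return pooled


feature = np.arange(36, dtype=np.float64).reshape(6, 6)
for bins in [1, 2, 3, 6]:  # the bin sizes used by the PSPModule in this article
    print(bins, adaptive_avg_pool2d(feature, bins).shape)
```

Whatever the input resolution, each branch ends up with a fixed grid (1 × 1, 2 × 2, 3 × 3, or 6 × 6), which is what lets the module summarize context at several scales.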

1. Assembling PSPNet

    import paddle
    import paddle.nn as nn
    import paddle.nn.functional as F
    from paddle.vision.models import resnet50, resnet101


    class PSPNet(nn.Layer):
        def __init__(self, num_classes=13, backbone_name="resnet50"):
            super(PSPNet, self).__init__()

            if backbone_name == "resnet50":
                backbone = resnet50(pretrained=True)
            elif backbone_name == "resnet101":
                backbone = resnet101(pretrained=True)
            else:
                raise ValueError("unsupported backbone: {}".format(backbone_name))

            # self.layer0 = nn.Sequential(*[backbone.conv1, backbone.bn1, backbone.relu, backbone.maxpool])
            self.layer0 = nn.Sequential(*[backbone.conv1, backbone.maxpool])
            self.layer1 = backbone.layer1
            self.layer2 = backbone.layer2
            self.layer3 = backbone.layer3
            self.layer4 = backbone.layer4

            num_channels = 2048

            self.pspmodule = PSPModule(num_channels, [1, 2, 3, 6])

            # The PSPModule concatenates its branches with its input,
            # which doubles the channel count here
            num_channels *= 2

            self.classifier = nn.Sequential(*[
                nn.Conv2D(num_channels, 512, 3, padding=1),
                nn.BatchNorm2D(512),
                nn.ReLU(),
                nn.Dropout2D(0.1),
                nn.Conv2D(512, num_classes, 1)
            ])

        def forward(self, inputs):
            x = self.layer0(inputs)
            x = self.layer1(x)
            x = self.layer2(x)
            x = self.layer3(x)
            x = self.layer4(x)
            x = self.pspmodule(x)
            x = self.classifier(x)
            # Restore the resolution of the input image
            out = F.interpolate(
                x,
                paddle.shape(inputs)[2:],
                mode='bilinear',
                align_corners=True)

            return out

2. Defining the PSPModule

    class PSPModule(nn.Layer):
        def __init__(self, num_channels, bin_size_list):
            super(PSPModule, self).__init__()
            self.bin_size_list = bin_size_list
            num_filters = num_channels // len(bin_size_list)
            # Use a LayerList rather than a plain Python list so that the branch
            # parameters are registered with the layer (trained and saved)
            self.features = nn.LayerList()
            for i in range(len(bin_size_list)):
                self.features.append(nn.Sequential(*[
                    nn.Conv2D(num_channels, num_filters, 1),
                    nn.BatchNorm2D(num_filters),
                    nn.ReLU()
                ]))

        def forward(self, inputs):
            out = [inputs]
            for idx, f in enumerate(self.features):
                # Pool to a bin_size x bin_size grid, reduce channels, then up-sample
                pool = paddle.nn.AdaptiveAvgPool2D(self.bin_size_list[idx])
                x = pool(inputs)
                x = f(x)
                x = F.interpolate(x, paddle.shape(inputs)[2:], mode="bilinear", align_corners=True)
                out.append(x)

            out = paddle.concat(out, axis=1)
            return out
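The classifier in PSPNet expects `num_channels * 2` input channels. A short calculation, following the integer division in `PSPModule.__init__`, shows when that holds; the function name is hypothetical.

```python
def psp_output_channels(num_channels, bin_size_list):
    """Channels after concatenating the input with one reduced branch per bin size."""
    num_filters = num_channels // len(bin_size_list)  # output of each 1 x 1 convolution
    return num_channels + num_filters * len(bin_size_list)


print(psp_output_channels(2048, [1, 2, 3, 6]))
print(psp_output_channels(2048, [1, 2, 3]))
```

With four bins, 2048 input channels give 4 × 512 extra channels and a total of 4096, exactly double. With a bin count that does not divide the channel count (three bins here), the total is 4094, so the hard-coded doubling in PSPNet would no longer match.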

V. Model Training

The previous sections covered data processing and model construction. Training additionally involves the following:

- Loss function: U-Net and PSPNet both classify the feature map pixel by pixel, so the loss is the softmax cross-entropy computed for every pixel. It is implemented in `work/Class3/basic_seg_loss.py`.
- Optimizer: SGD (stochastic gradient descent).
- Resources: AI Studio only offers a single GPU, so multi-GPU training is not considered.
- Resuming from a checkpoint: the model parameters and the optimizer state are saved so that training can be resumed later. This is implemented in `work/Class3/utils.py`.

Segmentation quality is evaluated with two metrics, both defined in `work/Class3/utils.py`:

- Accuracy: the fraction of pixels whose predicted class matches the ground-truth label.
- Intersection over union (IoU): for each class, the overlap between the predicted and ground-truth regions divided by their union.

Training is started as follows:

```python
# Run the following command to start training:
# python work/Class3/train.py
```

    # Train the model; remember to set use_gpu to True in train.py
    !python work/Class3/train.py
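The loss file itself is not shown in the article. As a rough illustration of a per-pixel softmax cross-entropy, here is a NumPy sketch; the function name, the array layouts, and the plain mean over all pixels are assumptions, and the project's actual loss may differ (for example in how it treats ignored labels).

```python
import math

import numpy as np


def pixelwise_softmax_cross_entropy(logits, labels):
    """Mean softmax cross-entropy over all pixels.

    logits: float array of shape (N, C, H, W)
    labels: int array of shape (N, H, W) with values in [0, C)
    """
    shifted = logits - logits.max(axis=1, keepdims=True)  # for numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    # Pick the log-probability of the true class at every pixel
    picked = np.take_along_axis(log_probs, labels[:, None, :, :], axis=1)
    return float(-picked.mean())


logits = np.zeros((1, 13, 4, 4))              # identical scores for all 13 classes
labels = np.zeros((1, 4, 4), dtype=np.int64)
loss = pixelwise_softmax_cross_entropy(logits, labels)
print(loss, math.log(13))                     # uniform scores give a loss of ln(13)
```

An untrained 13-class model that scores every class equally starts at a loss of ln(13), which is a handy sanity check for the first training iterations.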

VI. Prediction

    import os

    import cv2
    import numpy as np
    import paddle
    from paddle.io import DataLoader

    import work.Class3.utils as utils
    from work.Class3.unet import UNet
    from work.Class3.pspnet import PSPNet

    # Model-loading function
    def loadModel(net, model_path):
        if net == 'unet':
            model = UNet(13)
        elif net == 'pspnet':
            model = PSPNet()
        else:
            raise ValueError("unknown network: {}".format(net))
        params_dict = paddle.load(model_path)
        model.set_state_dict(params_dict)
        return model

    # Validation function; change the argument to choose the model to validate
    def Val(net='unet'):
        image_folder = r"work/dataset/val_frames"
        label_folder = r"work/dataset/val_masks"
        model_path = r"work/Class3/output/{}_epoch200.pdparams".format(net)
        # Both models write to this folder, so a later run overwrites an earlier one
        output_dir = r"work/Class3/val_result"
        if not os.path.isdir(output_dir):
            os.mkdir(output_dir)

        model = loadModel(net, model_path)
        model.eval()

        dataset = Basic_ValDataset(image_folder, label_folder, 256)  # size is 256
        dataloader = DataLoader(dataset, batch_size=1, shuffle=False, num_workers=1)

        result_dict = {}
        val_acc_list = []
        val_iou_list = []

        with paddle.no_grad():  # gradients are not needed for validation
            for index, data in enumerate(dataloader):
                image = data["Image"]
                label = data["Label"]
                imgPath = data["Path"][0]
                image = paddle.transpose(image, [0, 3, 1, 2])  # NHWC -> NCHW
                pred = model(image)
                label_pred = np.argmax(pred.numpy(), 1)

                # Compute the acc and iou metrics
                label_true = label.numpy()
                acc, acc_cls, mean_iu, fwavacc = utils.label_accuracy_score(label_true, label_pred, n_class=13)
                filename = os.path.basename(imgPath)
                print('{}, acc:{}, iou:{}, acc_cls{}'.format(filename, acc, mean_iu, acc_cls))
                val_acc_list.append(acc)
                val_iou_list.append(mean_iu)
                # Class indices fit in uint8, a type cv2.imwrite can store
                result = label_pred[0].astype(np.uint8)
                cv2.imwrite(os.path.join(output_dir, filename), result)

        val_acc, val_iou = np.mean(val_acc_list), np.mean(val_iou_list)
        print('val_acc:{}, val_iou{}'.format(val_acc, val_iou))

    Val(net='unet')  # validate U-Net

    000000000.png, acc:0.9532470703125, iou:0.21740302272507017, acc_cls0.24366524880119939
    000000001.png, acc:0.9403533935546875, iou:0.21750944976291642, acc_cls0.2397760950676889
    000000002.png, acc:0.8805084228515625, iou:0.20677225948304948, acc_cls0.22165470417461045
    000000003.png, acc:0.910186767578125, iou:0.5374669697784406, acc_cls0.6002255939000143
    000000004.png, acc:0.9135894775390625, iou:0.49426367831723594, acc_cls0.5440891190528987
    000000005.png, acc:0.9084930419921875, iou:0.5620866142956834, acc_cls0.6502875734164735
    000000006.png, acc:0.9343414306640625, iou:0.6045398696214368, acc_cls0.6824411153037097
    000000007.png, acc:0.86566162109375, iou:0.13596347532565847, acc_cls0.15904775159141893
    000000008.png, acc:0.92608642578125, iou:0.6066313636385289, acc_cls0.686377579583622
    000000009.png, acc:0.9074554443359375, iou:0.14527489862487447, acc_cls0.19636295563014194
    000000010.png, acc:0.978912353515625, iou:0.41013007889626, acc_cls0.5309096608495731
    000000011.png, acc:0.8917388916015625, iou:0.12945535175493833, acc_cls0.15509463825018682
    000000012.png, acc:0.88568115234375, iou:0.12710576977334995, acc_cls0.13982065622130674
    000000013.png, acc:0.8527374267578125, iou:0.12052946145058839, acc_cls0.15498666764101443
    000000014.png, acc:0.855865478515625, iou:0.11997121417720466, acc_cls0.1300705914081646
    000000015.png, acc:0.8303680419921875, iou:0.11224693229386994, acc_cls0.15063578243606204
    000000016.png, acc:0.81634521484375, iou:0.12238405158883152, acc_cls0.1552799892215321
    000000017.png, acc:0.8724517822265625, iou:0.14832212341894052, acc_cls0.15794770487704352
    000000018.png, acc:0.973236083984375, iou:0.15880628364464697, acc_cls0.16694603198365687
    000000019.png, acc:0.9571380615234375, iou:0.15820230152015433, acc_cls0.16694095946803228
    000000020.png, acc:0.9492950439453125, iou:0.15843530267671513, acc_cls0.17159408155183756
    000000021.png, acc:0.9835205078125, iou:0.5169552867224676, acc_cls0.5293089391662275
    000000022.png, acc:0.93670654296875, iou:0.14081470138925065, acc_cls0.14986690818069334
    000000023.png, acc:0.9805908203125, iou:0.15547314332955006, acc_cls0.16389840413777204
    000000024.png, acc:0.973480224609375, iou:0.15124500798277063, acc_cls0.21221830238297923
    000000025.png, acc:0.8671112060546875, iou:0.1320808783138103, acc_cls0.18834871622115743
    000000026.png, acc:0.8807220458984375, iou:0.12551283537045949, acc_cls0.160127612253545
    000000027.png, acc:0.8549346923828125, iou:0.1256957684753734, acc_cls0.1947882072082881
    000000028.png, acc:0.7188873291015625, iou:0.09553027193514095, acc_cls0.13162457606165895
    000000029.png, acc:0.6623687744140625, iou:0.08602672874865583, acc_cls0.1448762024148364
    000000030.png, acc:0.6565704345703125, iou:0.07762716768192297, acc_cls0.14779997114294124
    000000031.png, acc:0.668609619140625, iou:0.08023237592062181, acc_cls0.15122971232487634
    000000032.png, acc:0.982666015625, iou:0.531055672795304, acc_cls0.551112678117131
    000000033.png, acc:0.6807403564453125, iou:0.08217231354625484, acc_cls0.12642576184992113
    000000034.png, acc:0.7405242919921875, iou:0.09975355253896562, acc_cls0.14102723936071107
    000000035.png, acc:0.7180633544921875, iou:0.08998982428014987, acc_cls0.12264189412001483
    000000036.png, acc:0.7132110595703125, iou:0.09494116642949992, acc_cls0.15935831094464523
    000000037.png, acc:0.74932861328125, iou:0.10875879497291979, acc_cls0.15855171662196246
    000000038.png, acc:0.8556671142578125, iou:0.12793083621254434, acc_cls0.15536618070094121
    000000039.png, acc:0.8833160400390625, iou:0.12986589591132633, acc_cls0.15274859248800776
    000000040.png, acc:0.8965606689453125, iou:0.13013244391447568, acc_cls0.14474484569354112
    000000041.png, acc:0.9409332275390625, iou:0.6847726608359286, acc_cls0.7734320143470286
    000000042.png, acc:0.94476318359375, iou:0.13839467135688366, acc_cls0.14964636561620268
    000000043.png, acc:0.9872589111328125, iou:0.533262521084918, acc_cls0.5504109781551652
    000000044.png, acc:0.88800048828125, iou:0.1229156693978053, acc_cls0.1447694124446469
    000000045.png, acc:0.881378173828125, iou:0.12194908345030431, acc_cls0.14572180611905405
    000000046.png, acc:0.851593017578125, iou:0.11931384553390069, acc_cls0.13810932798358022
    000000047.png, acc:0.8807220458984375, iou:0.12388368769572063, acc_cls0.1413720889511982
    000000048.png, acc:0.8756103515625, iou:0.12691871866741045, acc_cls0.17894228937721984
    000000049.png, acc:0.92852783203125, iou:0.1372728846966373, acc_cls0.1531958262879321
    000000050.png, acc:0.927459716796875, iou:0.13396655339308303, acc_cls0.14496793246471645
    val_acc:0.8728141036688113, val_iou0.21211657716377352

    Val(net='pspnet')  # validate PSPNet

    /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/nn/layer/norm.py:641: UserWarning: When training, we now always track global mean and variance.
      "When training, we now always track global mean and variance.")

    000000000.png, acc:0.9158935546875, iou:0.1577242942255457, acc_cls0.17173641185720198
    000000001.png, acc:0.910797119140625, iou:0.16134327173108523, acc_cls0.1762481738122499
    000000002.png, acc:0.890045166015625, iou:0.15437460034040562, acc_cls0.17223887049440076
    000000003.png, acc:0.8770599365234375, iou:0.32909551160641853, acc_cls0.3755967387939404
    000000004.png, acc:0.896759033203125, iou:0.4255025049622586, acc_cls0.47846308530983944
    000000005.png, acc:0.874267578125, iou:0.4274390621432923, acc_cls0.4899027661840728
    000000006.png, acc:0.8851470947265625, iou:0.43181422085059556, acc_cls0.47451645143452004
    000000007.png, acc:0.8247833251953125, iou:0.10052916219690879, acc_cls0.1383570399712419
    000000008.png, acc:0.8719635009765625, iou:0.38244574435993944, acc_cls0.43329535485474685
    000000009.png, acc:0.8692779541015625, iou:0.13464066456750332, acc_cls0.18448936562578214
    000000010.png, acc:0.9468536376953125, iou:0.44863223039889155, acc_cls0.46856662893925427
    000000011.png, acc:0.941162109375, iou:0.13867667780473572, acc_cls0.18118463243502247
    000000012.png, acc:0.945068359375, iou:0.1389176838363624, acc_cls0.15043614809102962
    000000013.png, acc:0.9033355712890625, iou:0.12846794742347878, acc_cls0.14400104197131805
    000000014.png, acc:0.8373565673828125, iou:0.11054204416461214, acc_cls0.13875799125240074
    000000015.png, acc:0.86090087890625, iou:0.1264827994115744, acc_cls0.16435776274153532
    000000016.png, acc:0.7948760986328125, iou:0.11874856076666136, acc_cls0.15379384017006992
    000000017.png, acc:0.8228759765625, iou:0.12823383572579636, acc_cls0.1570891982493681
    000000018.png, acc:0.9229583740234375, iou:0.1302237343290557, acc_cls0.13985282348585362
    000000019.png, acc:0.914764404296875, iou:0.12877152670350586, acc_cls0.14030697926333272
    000000020.png, acc:0.906524658203125, iou:0.12558250297736512, acc_cls0.13805377313078535
    000000021.png, acc:0.9603271484375, iou:0.4624199768708489, acc_cls0.47837019179957135
    000000022.png, acc:0.9090728759765625, iou:0.12669000770141225, acc_cls0.13971059069788844
    000000023.png, acc:0.9561920166015625, iou:0.1401867698842562, acc_cls0.14819146018309393
    000000024.png, acc:0.958831787109375, iou:0.14103439018664546, acc_cls0.1490837315909282
    000000025.png, acc:0.8876800537109375, iou:0.12239545261262934, acc_cls0.13539270176077994
    000000026.png, acc:0.897247314453125, iou:0.12537335704194594, acc_cls0.13749971322365556
    000000027.png, acc:0.905670166015625, iou:0.1277774355920293, acc_cls0.13915315931355438
    000000028.png, acc:0.8493804931640625, iou:0.1123330103897112, acc_cls0.12825470143791537
    000000029.png, acc:0.74676513671875, iou:0.08918434684919538, acc_cls0.11052698832946779
    000000030.png, acc:0.662445068359375, iou:0.07431417013700324, acc_cls0.13493026187817742
    000000031.png, acc:0.675506591796875, iou:0.07255593791925741, acc_cls0.1490838030416825
    000000032.png, acc:0.9351348876953125, iou:0.4372444574056901, acc_cls0.4611648985543674
    000000033.png, acc:0.723907470703125, iou:0.08647248810649447, acc_cls0.1537256973411532
    000000034.png, acc:0.6313934326171875, iou:0.06857098073634199, acc_cls0.1488324367913469
    000000035.png, acc:0.804412841796875, iou:0.10820015109697752, acc_cls0.13811802302293585
    000000036.png, acc:0.808563232421875, iou:0.10711285792361261, acc_cls0.12773659704192822
    000000037.png, acc:0.73858642578125, iou:0.09494626712583215, acc_cls0.15144985295885574
    000000038.png, acc:0.8296051025390625, iou:0.10950276111431888, acc_cls0.13514122161203976
    000000039.png, acc:0.8893280029296875, iou:0.12520038793252025, acc_cls0.15648624414517176
    000000040.png, acc:0.8923492431640625, iou:0.12395896040329932, acc_cls0.14618784019861522
    000000041.png, acc:0.8879852294921875, iou:0.3993106317304327, acc_cls0.4364105449925553
    000000042.png, acc:0.9210662841796875, iou:0.12852261270994678, acc_cls0.143167733626099
    000000043.png, acc:0.9283599853515625, iou:0.4298842882667755, acc_cls0.4555886592291649
    000000044.png, acc:0.9065093994140625, iou:0.12527710447115725, acc_cls0.14299767782824516
    000000045.png, acc:0.8910980224609375, iou:0.12194565425367523, acc_cls0.14454145715166625
    000000046.png, acc:0.881591796875, iou:0.12085055326063152, acc_cls0.140775084335062
    000000047.png, acc:0.8845977783203125, iou:0.12193075116381968, acc_cls0.13955108498475677
    000000048.png, acc:0.8610992431640625, iou:0.11238279829752305, acc_cls0.12585970329906584
    000000049.png, acc:0.8972930908203125, iou:0.12274889202392533, acc_cls0.13700648812518762
    000000050.png, acc:0.8695068359375, iou:0.11524267939015634, acc_cls0.12829112730315914
    val_acc:0.8667485854204964, val_iou0.17807370025733443
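The accuracy and IoU values printed above come from `utils.label_accuracy_score`, whose source is not shown in this article. The sketch below assumes the common confusion-matrix formulation of both metrics; the function names are hypothetical and the project's own utility may differ in details such as how absent classes are averaged.

```python
import numpy as np


def confusion_matrix(label_true, label_pred, n_class):
    """Count (true class, predicted class) pairs for two flat integer arrays."""
    valid = (label_true >= 0) & (label_true < n_class)
    pairs = n_class * label_true[valid].astype(int) + label_pred[valid].astype(int)
    return np.bincount(pairs, minlength=n_class ** 2).reshape(n_class, n_class)


def accuracy_and_mean_iou(label_true, label_pred, n_class):
    hist = confusion_matrix(label_true.ravel(), label_pred.ravel(), n_class)
    correct = np.diag(hist)
    acc = correct.sum() / hist.sum()
    union = hist.sum(axis=1) + hist.sum(axis=0) - correct
    with np.errstate(divide='ignore', invalid='ignore'):
        iou = correct / union          # NaN for classes absent from both maps
    return acc, np.nanmean(iou)


label_true = np.zeros((10, 10), dtype=np.int64)
label_true[0, :5] = 1                                # 5 of 100 pixels belong to class 1
label_pred = np.zeros((10, 10), dtype=np.int64)      # the model predicts class 0 everywhere
acc, mean_iou = accuracy_and_mean_iou(label_true, label_pred, n_class=13)
print(acc, mean_iou)
```

In this toy case the pixel accuracy is 0.95 while the mean IoU is only 0.475, because the small class is missed entirely. The same effect is a plausible reason why the validation runs above combine accuracies near 87% with much lower IoU values: small classes weigh as much as the dominant road surface in the IoU average.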

VII. Results and Visualization

U-Net after 200 epochs: acc 87.28%, IoU 21.21%
PSPNet after 200 epochs: acc 86.67%, IoU 17.81%

Visual comparison of U-Net and PSPNet

    # Convert the predictions to color images
    import json
    import os

    import cv2
    import numpy as np
    from PIL import Image

    labels = ['Background', 'Asphalt', 'Paved', 'Unpaved', 'Markings', 'Speed-Bump',
              'Cats-Eye', 'Storm-Drain', 'Patch', 'Water-Puddle', 'Pothole', 'Cracks']


    # Convert a label image to a color image
    def mask2color(mask, labels):
        with open(r"work/dataset/mask2color.json") as f:
            jsonfile = json.load(f)
        h, w = mask.shape[:2]
        color = np.zeros([h, w, 3])

        for index in range(len(labels)):
            if index >= 8:
                mask_index = index + 1  # mask values skip 8, so shift the index
            else:
                mask_index = index
            if mask_index in mask:
                color[np.where(mask == mask_index)] = np.asarray(jsonfile[labels[index]])
            else:
                continue
        return color


    # Save the color images to the {net}_color_result folder under Class3
    def save_color(net):
        save_dir = r"work/Class3/{}_color_result".format(net)
        if not os.path.isdir(save_dir):
            os.mkdir(save_dir)
        # val_result holds the predictions of whichever model Val() ran last
        mask_dir = r"work/Class3/val_result"
        mask_names = [f for f in os.listdir(mask_dir) if f.endswith('.png')]
        for maskname in mask_names:
            mask = cv2.imread(os.path.join(mask_dir, maskname), -1)
            color = mask2color(mask, labels)
            result = Image.fromarray(np.uint8(color))
            result.save(os.path.join(save_dir, maskname))

    save_color('pspnet')  # save the PSPNet predictions as color images

    save_color('unet')  # save the U-Net predictions as color images
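The per-class loop in `mask2color` can also be expressed as a single lookup-table indexing step. The sketch below shows that idea with NumPy; the function name and the three palette colors are made up for illustration, whereas the real colors come from mask2color.json.

```python
import numpy as np


def colorize(mask, palette):
    """Map an (H, W) integer mask to an (H, W, 3) uint8 image via a lookup table."""
    lut = np.zeros((max(palette) + 1, 3), dtype=np.uint8)  # unlisted values stay black
    for value, rgb in palette.items():
        lut[value] = rgb
    return lut[mask]  # fancy indexing applies the table to every pixel at once


# Hypothetical colors keyed by mask value
palette = {0: (0, 0, 0), 1: (255, 0, 0), 9: (0, 0, 255)}
mask = np.array([[0, 1], [9, 1]])
colored = colorize(mask, palette)
print(colored.shape, colored[1, 0])
```

Mask values larger than the highest palette key raise an IndexError in this sketch, so a real palette would need an entry for the largest class value.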

    import matplotlib.pyplot as plt
    from PIL import Image

    # Images used for the comparison
    newsize = (256, 256)
    sample_ids = ["000000000", "000000032", "000000041"]

    plt.figure(figsize=(20, 24))  # set the figure size
    for row, sample_id in enumerate(sample_ids):
        frames = Image.open("work/dataset/val_frames/{}.png".format(sample_id)).resize(newsize)
        gt_color = Image.open("work/dataset/val_colors/{}GT.png".format(sample_id)).resize(newsize)
        unet = Image.open("work/Class3/unet_color_result/{}.png".format(sample_id))
        pspnet = Image.open("work/Class3/pspnet_color_result/{}.png".format(sample_id))

        # One row per sample: input frame, ground truth, U-Net, PSPNet
        panels = [('frames', frames), ('GT', gt_color), ('unet', unet), ('pspnet', pspnet)]
        for col, (title, img) in enumerate(panels):
            plt.subplot(3, 4, row * 4 + col + 1)
            plt.title(title)
            plt.imshow(img)
            plt.axis('off')
    plt.show()

    <Figure size 1440x1728 with 12 Axes>
