【AI达人特训营】PaddleNLP实现聊天问答匹配

时间:2025-08-13

【AI达人特训营】PaddleNLP实现聊天问答匹配

【飞桨领航团】AI达人特训营:PaddleNLP实现聊天问答匹配

1. 项目背景

本项目是ai达人特训营的选题,目测该选题来自这个比赛:房产行业聊天问答匹配

背景:贝壳找房通过技术驱动提供高品质居住服务,“有尊严的服务者、更美好的居住”,是其使命。在这个过程中,客户与房产经纪人之间在贝壳APP的即时通讯工具上进行频繁互动,以满足他们对居住环境的要求。

任务:

结果:

说明:比赛已过去一段时间,目前只能获取长期公开赛的成绩和排名。虽然竞争不大,但为理解文本匹配方法提供了很好的练习机会。

2. 数据集

2.1 数据说明

一共有三份数据:训练集、测试集和提交示例。

训练集包含客户问题文件和经纪人回复两个文件,涉及6000段对话(有标签答案)。

测试集包含客户问题文件和经纪人回复两个文件,涉及14000段对话(无标签答案)。

2.2 数据示例

解压训练集和测试集 In [1]

!unzip data/data144231/train.zip -d ./data && unzip data/data144231/test.zip -d ./data登录后复制

Archive: data/data144231/train.zip creating: ./data/train/ inflating: ./data/train/train.query.tsv inflating: ./data/train/train.reply.tsv Archive: data/data144231/test.zip creating: ./data/test/ inflating: ./data/test/test.query.tsv inflating: ./data/test/test.reply.tsv登录后复制

查看数据示例 In [2]

import pandas as pd train_query = pd.read_csv("data/train/train.query.tsv", sep='\t', header=None) train_query.columns = ['query_id', 'sentence']# query 文件有 2 列,分别是问题 Id 和客户问题,同一对话 Id 只有一个问题,已脱敏print(train_query.head()) train_reply = pd.read_csv("data/train/train.reply.tsv", sep='\t', header=None) train_reply.columns = ['query_id', 'reply_id', 'sentence', 'label']# reply 文件有 4 列,分别是:# 对话id,对应客户问题文件中的对话 id # 经纪人回复 Id,Id 对应真实回复顺序# 经纪人回复内容,已脱敏# 经纪人回复标签,1 表示此回复是针对客户问题的回答,0 相反print(train_reply.head())# 训练集文件结构与测试集相同,只不过 reply 文件没有回复标签test_query = pd.read_csv("data/test/test.query.tsv", sep='\t', header=None, encoding='gb18030') test_query.columns = ['query_id', 'sentence']print(test_query.head()) test_reply = pd.read_csv("data/test/test.reply.tsv", sep='\t', header=None, encoding='gb18030') test_reply.columns = ['query_id', 'reply_id', 'sentence']print(test_reply.head())登录后复制

新颖伪原创文章query_id sentence 小区地址:采荷一小是分校吗 是的吗? 毛坯房源?佣金和契税贵不贵? 这个位置靠近哪里? 套房价格还有优惠空间吗?query_id reply_id sentence label 杭州市采荷第一小学钱江苑校区,杭州市钱江新城实验学校。 1 是的 0 这是 0 因为公积金贷款额度不足 0 确实如此 uery_id sentence 小区名称:东区西区?何时发证书? 学校是哪一部分的? 需要看哪个部分? 楼层面积和户型大小 0 什么时候可以预约看房呢?query_id sentence 我们正在为您提供一套房源信息。 1 您看看我发的这些房源,都是金源花园的 0 这两套房子的价格很优惠。 0 好的房子一般都在顶层 0 看房的时间还没有确定,您稍等一下。 0

3. ERNIE 系列模型

原文: ERNIE: Enhanced Representation through Knowledge Integration ERNIE 2.0: A Continual Pre-training Framework for Language Understanding ERNIE 3.0: Large-scale Knowledge Enhanced Pre-training for Language Understanding and Generation

ERNIE 1.0

对原始的MLM任务进行了优化,加入了Entity-level和Phrase-level掩码技术,使模型能更好地掌握词汇短语的知识。此改进方法已成为后续中文字处理模型的标准配置。

Entity-level masking 和 Phrase-level masking

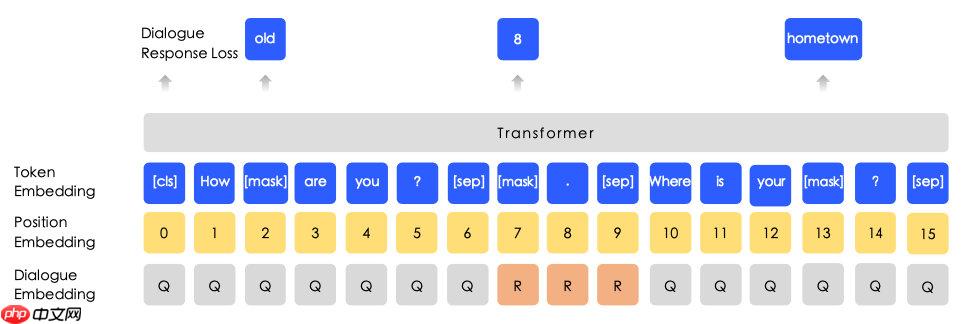

我们引入了Dialogue Language Model (DLM)来优化NSP任务,不仅在预测MASK TOKEN时判断多轮对话的真实性和完整性,还在多轮对话中准确捕捉信息流动。

Dialogue Language Model

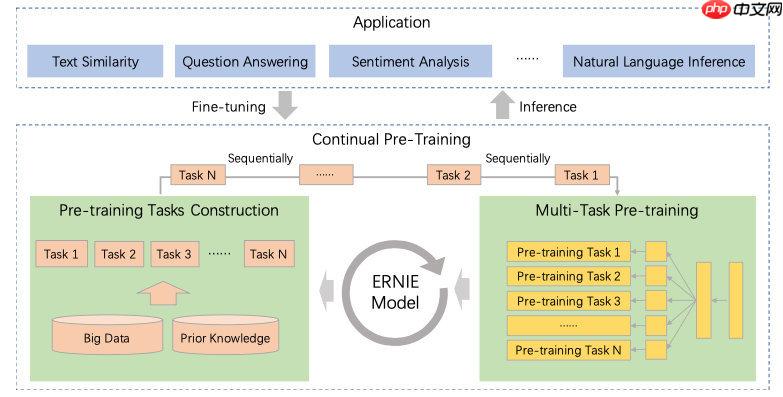

ERNIE 2.0

首先是框架上的闭环化,把各种下游任务持续加入模型中提升效果:

ERNIE 2.0

其次引入更多预训练任务,细节参见原论文。

ERNIE 3.0

- 0版本首先延续了之前有效的预训练任务。

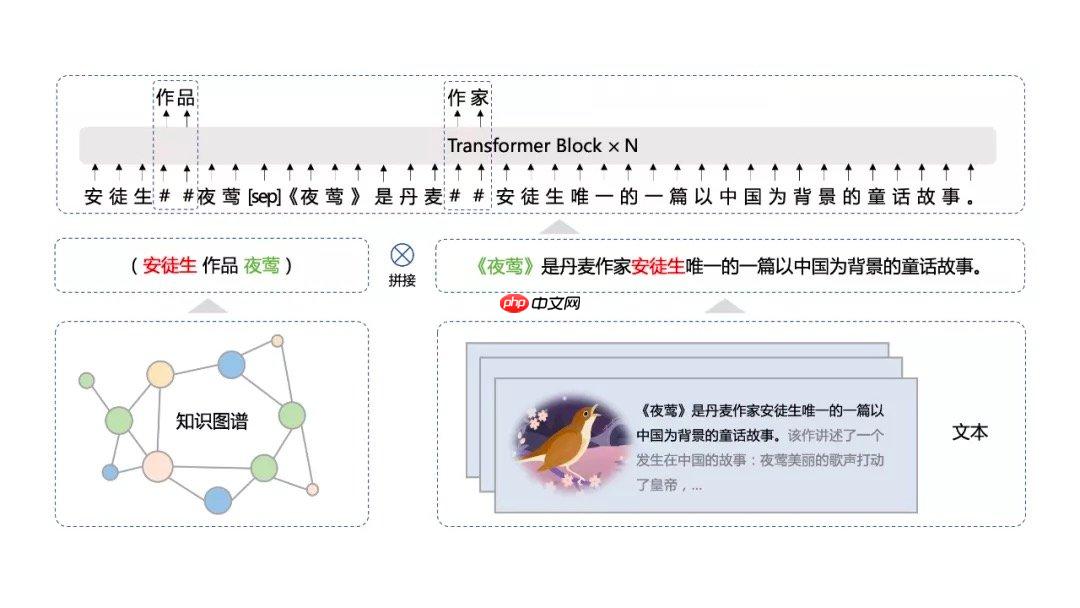

其次本提出了海量无监督文本与大规模知识图谱的平行预训练方法(Universal Knowledge-Text Prediction)。将万个知识图谱三元组与B大规模语料中的相关文本配对,并输入到预训练模型中进行联合掩码训练。这种方法为多模态学习提供了新的路径,有望在自然语言处理和信息检索等领域取得突破性进展。

Universal Knowledge-Text Prediction

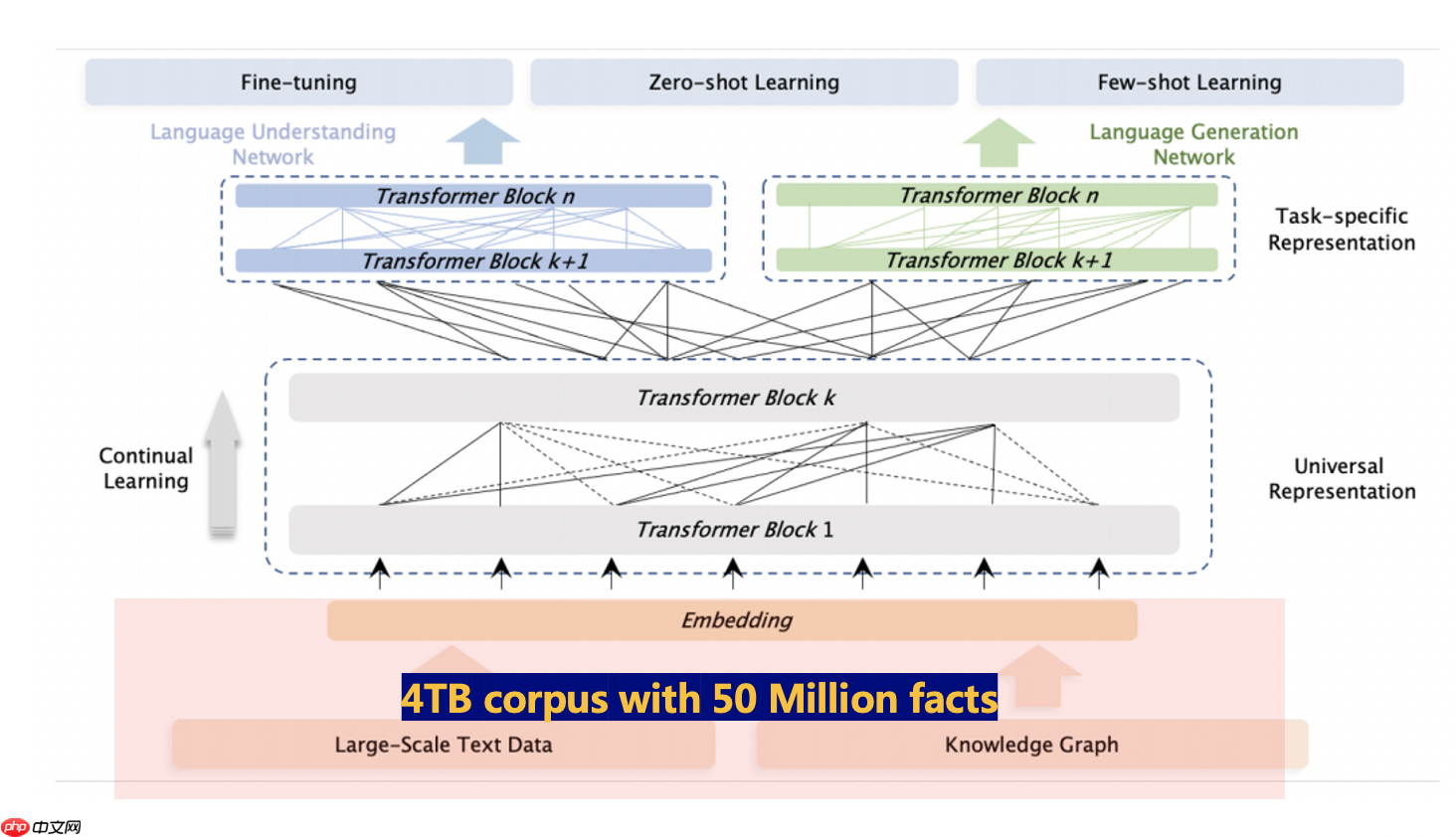

语料库4TB,我的天!!!

ERNIE 架由两层结构组成:第一层是通用语义表示网络,专注于捕捉基础和通用知识;第二层是任务特定的语义表示网络,它基于通用知识体系,专门针对特定的任务进行学习。在这一过程中,任务语义网络只关注相关类别内的预训练任务,而通用语义表示网络则覆盖所有预设任务的学习。

ERNIE 3.0模型框架

本项目使用 3.0 版本预训练模型。

4. 解题思路

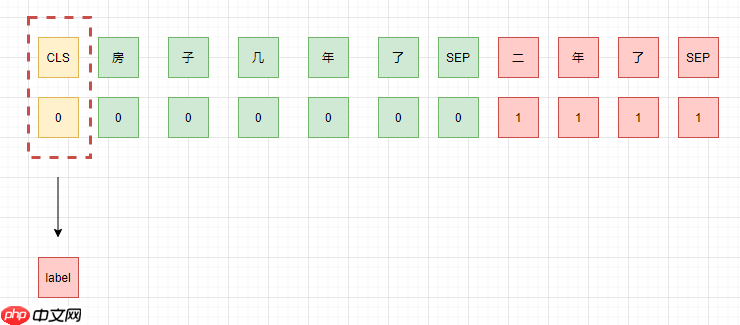

可以将问题与答案结合形成query-reply-pair,通过文本分类来区分属于提问还是回答类型。

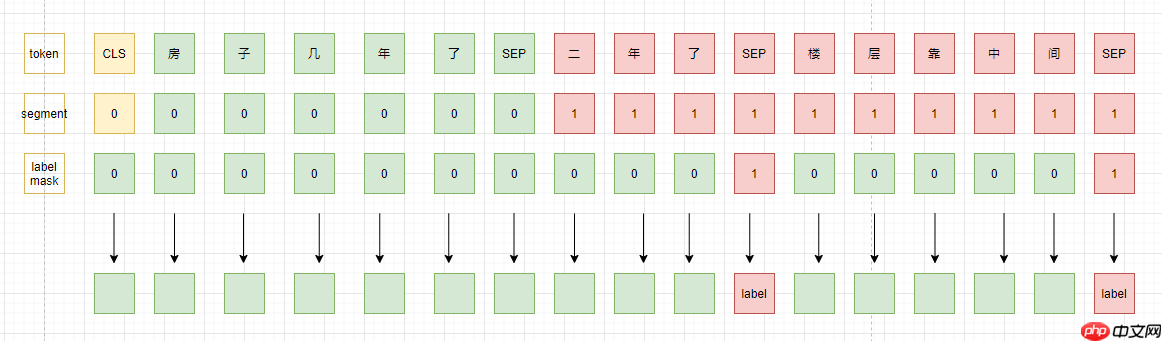

虽然对话看似不连贯,但实质上它们构成一个完整的对话序列,不同回答间的逻辑联系为理解提供了关键线索。因此,可尝试一种新方法:每个问题的所有回复应被整合到当前回应的末尾,并对每一项回复进行分析和验证。这种方法有助于更深入地挖掘信息,提升对话理解和数据处理的效果。

基于思路我们不仅能提取[CLS]对应的输出,还能预测每个回复中是否有与之匹配的答案。

本项目采用思路 1作为 baseline 。

5. Talk is cheap, show me the code

首先安装最新版本的 paddlenlp In []

!pip install paddlenlp --upgrade登录后复制

上述安装过程可能会报错,大概是parl包的版本依赖问题,对于本项目来说无伤大雅。

训练、测试代码都写在 query_reply_pair.py 文件中,有详细注释。

请注意,在主函数中调整训练参数列表。为了便于展示效果并减少输出信息过长的问题,结果在num_train_epochs=情况下生成。为获得更佳表现,请根据实际情况进行修改,并查看开头展示的项目成绩是使用num_train_epochs=结果。

运行脚本: In [4]

!python query_reply_pair.py登录后复制

[2022-06-22 10:04:10,523] [ INFO] - using `logging_steps` to initialize `eval_steps` to 100[2022-06-22 10:04:10,523] [ INFO] - ============================================================[2022-06-22 10:04:10,523] [ INFO] - Model Configuration Arguments [2022-06-22 10:04:10,523] [ INFO] - paddle commit id :590b4dbcdd989324089ce43c22ef151c746c92a3[2022-06-22 10:04:10,523] [ INFO] - export_model_dir :None[2022-06-22 10:04:10,523] [ INFO] - model_name_or_path :ernie-3.0-medium-zh[2022-06-22 10:04:10,524] [ INFO] - [2022-06-22 10:04:10,524] [ INFO] - ============================================================[2022-06-22 10:04:10,524] [ INFO] - Data Configuration Arguments [2022-06-22 10:04:10,524] [ INFO] - paddle commit id :590b4dbcdd989324089ce43c22ef151c746c92a3[2022-06-22 10:04:10,524] [ INFO] - max_seq_length :128[2022-06-22 10:04:10,524] [ INFO] - test_query_path :data/test/test.query.tsv[2022-06-22 10:04:10,524] [ INFO] - test_reply_path :data/test/test.reply.tsv[2022-06-22 10:04:10,524] [ INFO] - train_query_path :data/train/train.query.tsv[2022-06-22 10:04:10,524] [ INFO] - train_reply_path :data/train/train.reply.tsv[2022-06-22 10:04:10,524] [ INFO] - raw train dataset example: {'query': '东区西区?什么时候下证?', 'reply': '我在给你发套'}.[2022-06-22 10:04:11,911] [ INFO] - We are using <class 'paddlenlp.transformers.ernie.tokenizer.ErnieTokenizer'> to load 'ernie-3.0-medium-zh'.[2022-06-22 10:04:11,911] [ INFO] - Already cached /home/aistudio/.paddlenlp/models/ernie-3.0-medium-zh/ernie_3.0_medium_zh_vocab.txt[2022-06-22 10:04:11,934] [ INFO] - We are using <class 'paddlenlp.transformers.ernie.modeling.ErnieForSequenceClassification'> to load 'ernie-3.0-medium-zh'.[2022-06-22 10:04:11,934] [ INFO] - Already cached /home/aistudio/.paddlenlp/models/ernie-3.0-medium-zh/ernie_3.0_medium_zh.pdparams W0622 10:04:11.936193 1093 gpu_context.cc:278] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 11.2, Runtime API Version: 10.1 W0622 10:04:11.939395 1093 gpu_context.cc:306] device: 0, cuDNN Version: 7.6. feature train dataset example: {'input_ids': [1, 481, 1535, 7, 96, 10, 59, 225, 940, 2, 1852, 404, 99, 481, 1535, 131, 7, 96, 18, 958, 409, 2485, 225, 121, 4, 1852, 404, 99, 958, 409, 102, 257, 79, 412, 18, 225, 12043, 2], 'token_type_ids': [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1], 'label': 1}.[2022-06-22 10:04:16,960] [ INFO] - ============================================================[2022-06-22 10:04:16,960] [ INFO] - Training Configuration Arguments [2022-06-22 10:04:16,960] [ INFO] - paddle commit id :590b4dbcdd989324089ce43c22ef151c746c92a3[2022-06-22 10:04:16,961] [ INFO] - _no_sync_in_gradient_accumulation:True[2022-06-22 10:04:16,961] [ INFO] - adam_beta1 :0.9[2022-06-22 10:04:16,961] [ INFO] - adam_beta2 :0.999[2022-06-22 10:04:16,961] [ INFO] - adam_epsilon :1e-08[2022-06-22 10:04:16,961] [ INFO] - current_device :gpu:0[2022-06-22 10:04:16,961] [ INFO] - dataloader_drop_last :False[2022-06-22 10:04:16,961] [ INFO] - dataloader_num_workers :4[2022-06-22 10:04:16,961] [ INFO] - device :gpu[2022-06-22 10:04:16,961] [ INFO] - disable_tqdm :False[2022-06-22 10:04:16,961] [ INFO] - do_eval :True[2022-06-22 10:04:16,961] [ INFO] - do_export :False[2022-06-22 10:04:16,961] [ INFO] - do_predict :True[2022-06-22 10:04:16,961] [ INFO] - do_train :True[2022-06-22 10:04:16,961] [ INFO] - eval_batch_size :128[2022-06-22 10:04:16,961] [ INFO] - eval_steps :100[2022-06-22 10:04:16,961] [ INFO] - evaluation_strategy :IntervalStrategy.STEPS[2022-06-22 10:04:16,961] [ INFO] - fp16 :False[2022-06-22 10:04:16,961] [ INFO] - fp16_opt_level :O1[2022-06-22 10:04:16,961] [ INFO] - gradient_accumulation_steps :1[2022-06-22 10:04:16,961] [ INFO] - greater_is_better :True[2022-06-22 10:04:16,962] [ INFO] - ignore_data_skip :False[2022-06-22 10:04:16,962] [ INFO] - label_names :None[2022-06-22 10:04:16,962] [ INFO] - learning_rate :5e-05[2022-06-22 10:04:16,962] [ INFO] - load_best_model_at_end :True[2022-06-22 10:04:16,962] [ INFO] - local_process_index :0[2022-06-22 10:04:16,962] [ INFO] - local_rank :-1[2022-06-22 10:04:16,962] [ INFO] - log_level :-1[2022-06-22 10:04:16,962] [ INFO] - log_level_replica :-1[2022-06-22 10:04:16,962] [ INFO] - log_on_each_node :True[2022-06-22 10:04:16,962] [ INFO] - logging_dir :work/query_reply_pair/runs/Jun22_10-04-10_jupyter-532817-4195533[2022-06-22 10:04:16,962] [ INFO] - logging_first_step :False[2022-06-22 10:04:16,962] [ INFO] - logging_steps :100[2022-06-22 10:04:16,962] [ INFO] - logging_strategy :IntervalStrategy.STEPS[2022-06-22 10:04:16,962] [ INFO] - lr_scheduler_type :SchedulerType.LINEAR[2022-06-22 10:04:16,962] [ INFO] - max_grad_norm :1.0[2022-06-22 10:04:16,962] [ INFO] - max_steps :-1[2022-06-22 10:04:16,962] [ INFO] - metric_for_best_model :accuracy[2022-06-22 10:04:16,962] [ INFO] - minimum_eval_times :None[2022-06-22 10:04:16,962] [ INFO] - no_cuda :False[2022-06-22 10:04:16,962] [ INFO] - num_train_epochs :0.5[2022-06-22 10:04:16,962] [ INFO] - optim :OptimizerNames.ADAMW[2022-06-22 10:04:16,962] [ INFO] - output_dir :work/query_reply_pair/test[2022-06-22 10:04:16,962] [ INFO] - overwrite_output_dir :False[2022-06-22 10:04:16,962] [ INFO] - past_index :-1[2022-06-22 10:04:16,962] [ INFO] - per_device_eval_batch_size :128[2022-06-22 10:04:16,962] [ INFO] - per_device_train_batch_size :128[2022-06-22 10:04:16,963] [ INFO] - prediction_loss_only :False[2022-06-22 10:04:16,963] [ INFO] - process_index :0[2022-06-22 10:04:16,963] [ INFO] - remove_unused_columns :True[2022-06-22 10:04:16,963] [ INFO] - report_to :['visualdl'][2022-06-22 10:04:16,963] [ INFO] - resume_from_checkpoint :None[2022-06-22 10:04:16,963] [ INFO] - run_name :test[2022-06-22 10:04:16,963] [ INFO] - save_on_each_node :False[2022-06-22 10:04:16,963] [ INFO] - save_steps :100[2022-06-22 10:04:16,963] [ INFO] - save_strategy :IntervalStrategy.STEPS[2022-06-22 10:04:16,963] [ INFO] - save_total_limit :2[2022-06-22 10:04:16,963] [ INFO] - scale_loss :32768[2022-06-22 10:04:16,963] [ INFO] - seed :42[2022-06-22 10:04:16,963] [ INFO] - should_log :True[2022-06-22 10:04:16,963] [ INFO] - should_save :True[2022-06-22 10:04:16,963] [ INFO] - train_batch_size :128[2022-06-22 10:04:16,963] [ INFO] - warmup_ratio :0.0[2022-06-22 10:04:16,963] [ INFO] - warmup_steps :0[2022-06-22 10:04:16,963] [ INFO] - weight_decay :0.0[2022-06-22 10:04:16,963] [ INFO] - world_size :1[2022-06-22 10:04:16,963] [ INFO] - [2022-06-22 10:04:16,964] [ INFO] - ***** Running training *****[2022-06-22 10:04:16,965] [ INFO] - Num examples = 17268[2022-06-22 10:04:16,965] [ INFO] - Num Epochs = 1[2022-06-22 10:04:16,965] [ INFO] - Instantaneous batch size per device = 128[2022-06-22 10:04:16,965] [ INFO] - Total train batch size (w. parallel, distributed & accumulation) = 128[2022-06-22 10:04:16,965] [ INFO] - Gradient Accumulation steps = 1[2022-06-22 10:04:16,965] [ INFO] - Total optimization steps = 67.5[2022-06-22 10:04:16,965] [ INFO] - Total num train samples = 8634.0 100%|| 67/67 [00:17<00:00, 4.40it/s][2022-06-22 10:04:34,326] [ INFO] - Training completed. {'train_runtime': 17.4487, 'train_samples_per_second': 494.821, 'train_steps_per_second': 3.84, 'train_loss': 0.352832708785783, 'epoch': 0.4963} 100%|| 67/67 [00:17<00:00, 3.84it/s][2022-06-22 10:04:34,416] [ INFO] - Saving model checkpoint to work/query_reply_pair/test[2022-06-22 10:04:36,894] [ INFO] - tokenizer config file saved in work/query_reply_pair/test/tokenizer_config.json[2022-06-22 10:04:36,895] [ INFO] - Special tokens file saved in work/query_reply_pair/test/special_tokens_map.json ***** train metrics ***** epoch = 0.4963 train_loss = 0.3528 train_runtime = 0:00:17.44 train_samples_per_second = 494.821 train_steps_per_second = 3.84[2022-06-22 10:04:36,898] [ INFO] - ***** Running Evaluation *****[2022-06-22 10:04:36,898] [ INFO] - Num examples = 4317[2022-06-22 10:04:36,898] [ INFO] - Pre device batch size = 128[2022-06-22 10:04:36,898] [ INFO] - Total Batch size = 128[2022-06-22 10:04:36,898] [ INFO] - Total prediction steps = 34 100%|| 34/34 [00:02<00:00, 12.31it/s] ***** eval metrics ***** epoch = 0.4963 eval_accuracy = 0.8826 eval_loss = 0.283 eval_runtime = 0:00:03.21 eval_samples_per_second = 1344.038 eval_steps_per_second = 10.585[2022-06-22 10:04:40,112] [ INFO] - ***** Running Prediction *****[2022-06-22 10:04:40,112] [ INFO] - Num examples = 53757[2022-06-22 10:04:40,112] [ INFO] - Pre device batch size = 128[2022-06-22 10:04:40,112] [ INFO] - Total Batch size = 128[2022-06-22 10:04:40,112] [ INFO] - Total prediction steps = 420 100%|| 420/420 [00:51<00:00, 11.37it/s]***** test metrics ***** test_runtime = 0:00:52.06 test_samples_per_second = 1032.496 test_steps_per_second = 8.067 100%|| 420/420 [00:52<00:00, 7.95it/s]登录后复制

训练结果可视化:

进入左侧菜单栏的数据模型可视化模块,选择logdir并启动后,即可直观了解训练过程中的loss曲线。

log 文件会保存在类似 work/query_reply_pair/runs/Jun22_10-01-49_jupyter-532817-4195533 这样的路径下面。

由于上面训练过程只跑了 0.5 个 epoch,曲线基本只有一个点,这里就不进行展示了。

测试集预测结果保存在 work/query_reply_pair/test/test_labels.tsv 文件中,你可以直接点开看一下结果。 In [7]

import pandas as pd file_path = "work/query_reply_pair/test/test_labels.tsv"df = pd.read_csv(file_path, sep='\t') df.head()登录后复制

query reply label 我在给你发套 0 您看下我发的这几套 0 这两套也是金源花园的 0 价钱低 0 便宜的房子,一般都是顶楼 0

生成竞赛提交文件: In [8]

import pandas as pd sample_submit_path = "data/data144231/sample_submission.tsv"submit_path = "submit.tsv"submit = pd.read_csv(sample_submit_path, sep='\t', header=None) submit.columns = ['query_id', 'reply_id', 'label'] test_labels = pd.read_csv(file_path, sep='\t') label = test_labels['label'] submit['label'] = label submit.to_csv(submit_path, sep='\t', header=None, index=None)登录后复制

根目录下 submit.tsv 文件就可以拿去提交啦,提交大概 0.75+ 的分数 (num_train_epochs = 5.0 的情况下)。

以上就是【AI达人特训营】PaddleNLP实现聊天问答匹配的详细内容,更多请关注其它相关文章!